There are several different ways to describe the human genome, but the most common one focuses on the DNA content of the nucleus in eukaryotes; it does not include mitochondrial and chloroplast DNA. The standard reference genome sequence consists of one copy of each of the 22 autosomes plus one copy of the X chromosome and one copy of the Y chromosome. That's the definition of genome that I will use here.

The earliest direct estimates of the size of the human genome relied on Feulgen staining. The stain is quantitative, so a properly conducted procedure gives you the weight of DNA in the nucleus. According to these measurements, the standard diploid content of the human nucleus is 7.00 pg and the haploid content is 3.50 pg [See Ryan Gregory's Animal Genome Size Database].

Since the structure of DNA is known, we can estimate the average mass of a base pair. It is 650 daltons, or $1086 \times 10^{-24}$ g/bp. The size of the human genome in base pairs can be calculated by dividing the total mass of the haploid genome by the average mass of a base pair.

$$\frac{3.5\ \text{pg}}{1086 \times 10^{-12}\ \text{pg/bp}} \approx 3.2 \times 10^{9}\ \text{bp}$$
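The division above can be checked with a few lines of Python. This is only a sketch: the constant and function names are made up for illustration, and the mass per base pair is simply the figure quoted above, taken at face value.

```python
# Convert a DNA mass in picograms (pg) to a length in base pairs (bp).
# Assumption: the per-base-pair mass is the value quoted in the text
# (1086 x 10^-24 g/bp). All names here are illustrative, not from a library.

GRAMS_PER_BP = 1086e-24                   # average mass of one base pair, in grams
PG_PER_GRAM = 1e12                        # 1 g = 10^12 pg
PG_PER_BP = GRAMS_PER_BP * PG_PER_GRAM    # 1086 x 10^-12 pg/bp


def picograms_to_bp(mass_pg: float) -> float:
    """Return the number of base pairs in mass_pg picograms of DNA."""
    return mass_pg / PG_PER_BP


haploid_bp = picograms_to_bp(3.50)   # haploid nuclear DNA content
diploid_bp = picograms_to_bp(7.00)   # diploid nuclear DNA content

print(f"haploid: {haploid_bp / 1e9:.2f} Gb")
print(f"diploid: {diploid_bp / 1e9:.2f} Gb")
```

With the numbers quoted above, the haploid value works out to a little over 3.2 billion base pairs, which is where the familiar 3.2 Gb figure comes from.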

The textbooks settled on this value of 3.2 Gb by the late 1960s since it was confirmed by reassociation kinetics. According to C₀t analysis results from that time, roughly 10% of the genome consists of highly repetitive DNA, 25-30% is moderately repetitive and the rest is unique sequence DNA (Britten and Kohne, 1968).

A study by Morton (1991) looked at all of the estimates of genome size that had been published to date and concluded that the average size of the haploid genome in females is 3,227 Mb. This includes a complete set of autosomes and one X chromosome. The sum of autosomes plus a Y chromosome comes to 3,122 Mb. The average is about 3,200 Mb, which was similar to most estimates.

These estimates mean that the standard reference genome should be more than 3,227 Mb since it has to include all of the autosomes plus an X and a Y chromosome. The Y chromosome is about 60 Mb giving a total estimate of 3,287 Mb or 3.29 Gb.
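The arithmetic behind these numbers is easy to reproduce. The sketch below uses only the figures quoted above; the variable names are mine, and the 60 Mb size for the Y chromosome is the approximate value used in this post, not a measured constant.

```python
# Figures quoted in the text, in megabases (Mb). Names are illustrative only.
AUTOSOMES_PLUS_X_MB = 3227   # Morton (1991): autosomes + one X chromosome
AUTOSOMES_PLUS_Y_MB = 3122   # Morton (1991): autosomes + one Y chromosome
Y_CHROMOSOME_MB = 60         # assumption: approximate Y size used in the text

# Average of the two kinds of haploid set (X-bearing and Y-bearing).
mean_haploid_mb = (AUTOSOMES_PLUS_X_MB + AUTOSOMES_PLUS_Y_MB) / 2

# A reference genome holds the autosomes plus BOTH an X and a Y,
# so start from the X-bearing set and add the Y chromosome.
reference_mb = AUTOSOMES_PLUS_X_MB + Y_CHROMOSOME_MB

print(f"mean haploid set: {mean_haploid_mb:.1f} Mb")
print(f"reference genome: {reference_mb} Mb ({reference_mb / 1000:.2f} Gb)")
```

The point of separating the two calculations is that the "average" haploid genome and the reference genome are different things: the reference has to be larger than either haploid set because it carries both sex chromosomes.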

The standard reference genome

The common assumption about the size of the human genome in the past two decades has dropped to about 3,000 Mb because the draft sequence of the human genome came in at 2,800 Mb and the so-called "finished" sequence was still considerably less than 3,200 Mb. Most people didn't realize that there were significant gaps in the draft sequence and in the "finished" sequence, so the actual size is larger than the amount of sequence. The latest estimate of the size of the human genome from the Genome Reference Consortium is 3,099,441,038 bp (3,099 Mb) for Build 38, patch 14 (GRCh38.p14, February 2022). This includes an actual sequence of 2,948,318,359 bp and an estimate of the size of the remaining gaps. The total size estimates have been steadily dropping from >3.2 Gb to just under 3.1 Gb.
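To see how much of the GRCh38.p14 total is estimate rather than sequence, subtract the sequenced bases from the total. This is a quick sketch using the two figures quoted above; the variable names are illustrative.

```python
# GRCh38.p14 figures quoted in the text, in base pairs.
# Variable names are illustrative only.
TOTAL_BP = 3_099_441_038       # total size, including estimated gaps
SEQUENCED_BP = 2_948_318_359   # bases actually sequenced

# Whatever is not sequenced is the estimated size of the remaining gaps.
gap_bp = TOTAL_BP - SEQUENCED_BP
gap_fraction = gap_bp / TOTAL_BP

print(f"estimated gaps: {gap_bp:,} bp ({gap_bp / 1e6:.0f} Mb)")
print(f"share of total: {gap_fraction:.1%}")
```

The gaps come to about 151 Mb, or a bit under 5% of the total, which is why the amount of sequence alone understates the size of the genome.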

The telomere-to-telomere assembly

The first complete sequence of a human genome was published in April 2022 [The complete human genome sequence (2022)]. This telomere-to-telomere (T2T) assembly of every autosome and one X chromosome came in at 3,055 Mb (3.06 Gb). If you add in the Y chromosome, it comes to 3.12 Gb, which is very similar to the estimate for GRCh38.p14 (3.10 Gb). Based on all the available data, I think it's safe to say that the size of the human genome is about 3.1 Gb and not the 3.2 Gb that we've been using up until now.
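The comparison between the two assemblies can be sketched the same way. The Y chromosome size below is an assumption: it is the roughly 60 Mb figure used earlier in this post, not a value taken from the T2T assembly itself, and the variable names are illustrative.

```python
# All sizes in megabases (Mb), as quoted in the text.
T2T_AUTOSOMES_PLUS_X_MB = 3055   # T2T assembly: autosomes + one X chromosome
Y_CHROMOSOME_MB = 60             # assumption: approximate Y size used earlier
GRCH38_P14_MB = 3099             # GRCh38.p14 total, including gap estimates

# Put the T2T assembly on the same footing as the reference (autosomes + X + Y).
t2t_with_y_mb = T2T_AUTOSOMES_PLUS_X_MB + Y_CHROMOSOME_MB

difference_mb = t2t_with_y_mb - GRCH38_P14_MB
relative_difference = difference_mb / GRCH38_P14_MB

print(f"T2T + Y:    {t2t_with_y_mb} Mb ({t2t_with_y_mb / 1000:.2f} Gb)")
print(f"GRCh38.p14: {GRCH38_P14_MB} Mb ({GRCH38_P14_MB / 1000:.2f} Gb)")
print(f"difference: {difference_mb} Mb ({relative_difference:.1%})")
```

Under that assumption the two totals differ by only about 16 Mb, roughly half a percent, which is why both point to about 3.1 Gb.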

Variations in genome size

Everything comes with a caveat and human genome size is no exception. The actual size of your human genome may be different from mine and different from everyone else's, including your close relatives. This is because of the presence or absence of segmental duplications that can change the size of a human genome by as much as 200 Mb. It's possible to have a genome that's smaller than 3.0 Gb or one that's larger than 3.3 Gb without affecting fitness.

Nobody has figured out a good way to incorporate this genetic variation data into the standard reference genome by creating a sort of pan genome such as those we see in bacteria. The problem is that more and more examples of segmental duplications (and deletions) are being discovered every year so annotating those changes is a nightmare. In fact, it's a major challenge just to reconcile the latest telomere-to-telomere sequence (T2T-CHM13) and the current standard reference genome [What do we do with two different human genome reference sequences?].

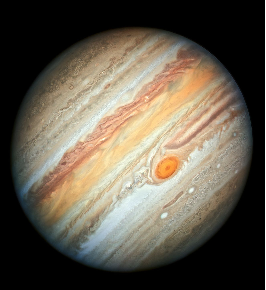

[Image Credit: Wikipedia: Creative Commons Attribution 2.0 Generic license]

Britten, R. and Kohne, D. (1968) Repeated Sequences in DNA. Science 161:529-540. [doi:10.1126/science.161.3841.529]

Morton, N.E. (1991) Parameters of the Human Genome. Proc. Natl. Acad. Sci. (USA) 88:7474-7476. [free article on PubMed Central]

International Human Genome Sequencing Consortium (2004) Finishing the euchromatic sequence of the human genome. Nature 431:931-945 [doi:10.1038/nature03001]