Sunday, November 20, 2022

Saturday, November 19, 2022

How many enhancers in the human genome?

In spite of what you might have read, the human genome does not contain one million functional enhancers.

The Sept. 15, 2022 issue of Nature contains a news article on "Gene regulation" [Two-layer design protects genes from mutations in their enhancers]. It begins with the following sentence.

The human genome contains only about 20,000 protein-coding genes, yet gene expression is controlled by around one million regulatory DNA elements called enhancers.

Sandwalk readers won't need to be told the reference for such an outlandish claim because you all know that it's the ENCODE Consortium summary paper from 2012, the one that kicked off their publicity campaign to convince everyone of the death of junk DNA (ENCODE, 2012). ENCODE identified several hundred thousand transcription factor (TF) binding sites and in 2012 they estimated that the total number of base pairs involved in regulating gene expression could account for 20% of the genome.

How many of those transcription factor binding sites are functional and how many are due to spurious binding to sites that have nothing to do with gene regulation? We don't know the answer to that question but we do know that there will be a huge number of spurious binding sites in a genome of more than three billion base pairs [Are most transcription factor binding sites functional?].

The scientists in the ENCODE Consortium didn't know the answer either but what's surprising is that they didn't even know there was a question. It never occurred to them that some of those transcription factor binding sites have nothing to do with regulation.

Fast forward ten years to 2022. Dozens of papers have been published criticizing the ENCODE Consortium for their lack of knowledge of the basic biochemical properties of DNA binding proteins. Surely nobody who is interested in this topic believes that there are one million functional regulatory elements (enhancers) in the human genome?

Wrong! The authors of this Nature article, Ran Elkon at Tel Aviv University (Israel) and Reuven Agami at the Netherlands Cancer Institute (Amsterdam, Netherlands), didn't get the message. They think it's quite plausible that the expression of every human protein-coding gene is controlled by an average of 50 regulatory sites even though there's not a single known example of any such gene.

Not only that, for some reason they think it's only important to mention protein-coding genes in spite of the fact that the reference they give for 20,000 protein-coding genes (Nurk et al., 2022) also claims there are an additional 40,000 noncoding genes. This is an incorrect claim since Nurk et al. have no proof that all those transcribed regions are actually genes but let's play along and assume that there really are 60,000 genes in the human genome. That reduces the average to "only" 17 enhancers per gene. I don't know of a single gene that has 17 or more proven enhancers, do you?
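For anyone who wants to check the arithmetic, here is a minimal Python sketch of the two averages. The numbers are the ones quoted in this post (one million claimed enhancers, 20,000 protein-coding genes, 40,000 additional noncoding genes); the function and variable names are just illustrative choices.

```python
# Figures quoted in the post; nothing here is new data.
CLAIMED_ENHANCERS = 1_000_000
PROTEIN_CODING_GENES = 20_000
NONCODING_GENES = 40_000  # the additional genes claimed by Nurk et al. (2022)


def enhancers_per_gene(enhancers: int, genes: int) -> float:
    """Return the average number of enhancers per gene."""
    return enhancers / genes


coding_only = enhancers_per_gene(CLAIMED_ENHANCERS, PROTEIN_CODING_GENES)
all_genes = enhancers_per_gene(
    CLAIMED_ENHANCERS, PROTEIN_CODING_GENES + NONCODING_GENES
)

print(f"protein-coding genes only: {coding_only:.0f} enhancers per gene")
print(f"all 60,000 genes: {all_genes:.1f} enhancers per gene")
```

The first average is 50 and the second is about 16.7, which rounds to the 17 used above.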

Why would two researchers who study gene regulation say that the human genome contains one million enhancers when there's no evidence to support such a claim and it doesn't make any sense? Why would Nature publish this paper when surely the editors must be aware of all the criticism that arose out of the 2012 ENCODE publicity fiasco?

I can think of only two answers to the first question. Either Elkon and Agami don't know of any papers challenging the view that most TF binding sites are functional (see below) or they do know of those papers but choose to ignore them. Neither answer is acceptable.

I think that the most important question in human gene regulation is how much of the genome is devoted to regulation. How many potential regulatory sites (enhancers) are functional and how many are spurious non-functional sites? Any paper on regulation that does not mention this problem should not be published. All results have to be interpreted in light of conflicting claims about function.

Here are some examples of papers that raise the issue. The point is not to prove that these authors are correct - although they are correct - but to show that there's a controversy. You can't just state that there are one million regulatory sites as if it were a fact when you know that the results are being challenged.

"The observations in the ENCODE articles can be explained by the fact that biological systems are noisy: transcription factors can interact at many nonfunctional sites, and transcription initiation takes place at different positions corresponding to sequences similar to promoter sequences, simply because biological systems are not tightly controlled." (Morange, 2014)

"... ENCODE had not shown what fraction of these activities play any substantive role in gene regulation, nor was the project designed to show that. There are other well-studied explanations for reproducible biochemical activities besides crucial human gene regulation, including residual activities (pseudogenes), functions in the molecular features that infest eukaryotic genomes (transposons, viruses, and other mobile elements), and noise." (Eddy, 2013)

"Given that experiments performed in a diverse number of eukaryotic systems have found only a small correlation between TF-binding events and mRNA expression, it appears that in most cases only a fraction of TF-binding sites significantly impacts local gene expression." (Palazzo and Gregory, 2014)

One surprising finding from the early genome-wide ChIP studies was that TF binding is widespread, with thousands to tens of thousands of binding events for many TFs. These numbers do not fit with existing ideas of the regulatory network structure, in which TFs were generally expected to regulate a few hundred genes, at most. Binding is not necessarily equivalent to regulation, and it is likely that only a small fraction of all binding events will have an important impact on gene expression. (Slattery et al., 2014)

Detailed maps of transcription factor (TF)-bound genomic regions are being produced by consortium-driven efforts such as ENCODE, yet the sequence features that distinguish functional cis-regulatory sites from the millions of spurious motif occurrences in large eukaryotic genomes are poorly understood. (White et al., 2013)

One outstanding issue is the fraction of factor binding in the genome that is "functional", which we define here to mean that disturbing the protein-DNA interaction leads to a measurable downstream effect on gene regulation. (Cusanovich et al., 2014)

... we expect, for example, accidental transcription factor-DNA binding to go on at some rate, so assuming that transcription equals function is not good enough. The null hypothesis after all is that most transcription is spurious and alternative transcripts are a consequence of error-prone splicing. (Hurst, 2013)

... as a chemist, let me say that I don't find the binding of DNA-binding proteins to random, non-functional stretches of DNA surprising at all. That hardly makes these stretches physiologically important. If evolution is messy, chemistry is equally messy. Molecules stick to many other molecules, and not every one of these interactions has to lead to a physiological event. DNA-binding proteins that are designed to bind to specific DNA sequences would be expected to have some affinity for non-specific sequences just by chance; a negatively charged group could interact with a positively charged one, an aromatic ring could insert between DNA base pairs and a greasy side chain might nestle into a pocket by displacing water molecules. It was a pity the authors of ENCODE decided to define biological functionality partly in terms of chemical interactions which may or may not be biologically relevant. (Jogalekar, 2012)

Nurk, S., Koren, S., Rhie, A., Rautiainen, M., Bzikadze, A. V., Mikheenko, A., et al. (2022) The complete sequence of a human genome. Science, 376:44-53. [doi:10.1126/science.abj6987]

The ENCODE Project Consortium (2012) An integrated encyclopedia of DNA elements in the human genome. Nature, 489:57-74. [doi:10.1038/nature11247]

Academic workers on strike at University of California schools

Graduate students, postdocs, and other "academic workers" are on strike for higher wages and better working conditions at University of California schools but it's very difficult to understand what's going on.

Several locals of the United Auto Workers union are on strike. The groups include Academic Researchers, Academic Student Employees (ASEs), Postdocs, and Student Researchers. The list of demands can be found on the UAW website: UAW Bargaining Highlights (All Units).

Here's the problem. At my university, graduate students can make money by getting a position as a TA (teaching assistant). This is a part-time job at an hourly rate. This may be a major source of income for humanities students but for most science students it's just a supplement to their stipend. The press reports on this strike keep referring to a yearly income and they make it sound like part-time employment as a TA should pay a living wage. For example, a recent Los Angeles Times article says,

The workers are demanding a base salary of $54,000 for all graduate student workers, child-care subsidies, enhanced healthcare for dependents, longer family leave, public transit passes and lower tuition costs for international scholars. The union said the workers earn an average current pay of about $24,000 a year.

I don't understand this concept of "base salary." In my experience, most TAs work part time. If they were paid $50 per hour then they would have to work about 30 hours per week over two semesters in order to earn $54,000 per year. That doesn't seem to leave much time for working on a thesis. Perhaps it includes a stipend that doesn't require teaching?
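Here's the back-of-the-envelope calculation as a short Python sketch. The $50 hourly rate is purely my hypothetical from the paragraph above, not a figure from the union or the university, and the function name is just for illustration.

```python
# Figures from the post and the quoted Los Angeles Times article.
DEMANDED_BASE_SALARY = 54_000   # dollars per year
HYPOTHETICAL_HOURLY_RATE = 50   # dollars per hour (hypothetical, not a real UC rate)
HOURS_PER_WEEK = 30             # the post's rough estimate


def weeks_needed(salary: float, hourly_rate: float, hours_per_week: float) -> float:
    """Return the number of working weeks needed to earn `salary`."""
    return salary / (hourly_rate * hours_per_week)


weeks = weeks_needed(DEMANDED_BASE_SALARY, HYPOTHETICAL_HOURLY_RATE, HOURS_PER_WEEK)
print(f"{weeks:.0f} weeks at {HOURS_PER_WEEK} hours per week")
```

That works out to 36 weeks of 30-hour weeks, which is roughly two full semesters of teaching.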

Our graduate students are paid a living allowance (currently about $28,000 Cdn) and their tuition and fees are covered by an extra $8,000. Most of them don't do any teaching. Almost all of this money comes from research grants and not directly from the university.

The University of California system seems to be very different from the one I'm accustomed to. Is the work of TAs obvious to most Americans? Do you understand the issues?

I also don't get the situation with postdocs. The union is asking for a $70,000 salary for postdocs and the university is offering an 8% increase in the first year and smaller increases in subsequent years. In Canada, postdocs are mostly paid from research grants and not from university funds. The average postdoc salary at the University of Toronto is $51,000 (Cdn) but the range is quite large ($40K - $100K). I don't think the University of Toronto can dictate to PIs the amount of money that they have to pay a postdoc but it does count them as employees and ensures that postdocs have healthcare and suitable working conditions. These postdocs are members of a union (CUPE 3902) and there is a minimum stipend of $36,000 (Cdn).

Can someone explain the situation at the University of California schools? Are they asking for a minimum salary of $70,000 (US) ($93,700 Cdn)? Will PIs have to pay postdocs more from their research grants if the union wins a wage increase but the postdocs are already earning more than $70,000?

It's all very confusing and the press doesn't seem to have a good handle on the situation.

Note: I know that the union doesn't expect the university to meet its maximum demands. I'm sure they will settle for something less. That's not the point I'm trying to make. I'm just trying to understand how graduate students and postdocs are paid in University of California schools.

Friday, November 18, 2022

Higher education for all?

I discuss a recent editorial in Science that advocates expanding university education in order to prepare students for jobs.

I believe that the primary goal of a university education is to teach students how to think (critical thinking). This goal is usually achieved within the context of an in-depth study in a particular field such as history, English literature, geology, or biochemistry. The best way of achieving that goal is called student-centered learning. It involves, among other things, classes that focus on discussion and debate under the guidance of an experienced teacher.

Universities and colleges also have job preparation programs such as business management, medicine, computer technology, and, to a lesser extent, engineering. These programs may, or may not, teach critical thinking (usually not).

About 85% of students who enter high school will graduate. About 63% of high school graduates go to college or university. The current college graduation rate is 65% (within six years). What this means is that for every 100 students that begin high school roughly 35 will graduate from college.
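The "roughly 35" figure comes from multiplying the three rates together. Here's a minimal Python sketch using only the percentages quoted above; the names are illustrative and the calculation assumes the three rates can simply be chained.

```python
# Rates quoted in the post.
HIGH_SCHOOL_GRADUATION_RATE = 0.85  # of students who enter high school
COLLEGE_ENROLLMENT_RATE = 0.63      # of high school graduates
COLLEGE_GRADUATION_RATE = 0.65      # of college entrants, within six years


def college_graduates(cohort_size: float) -> float:
    """Return how many of `cohort_size` high school entrants finish college."""
    return (
        cohort_size
        * HIGH_SCHOOL_GRADUATION_RATE
        * COLLEGE_ENROLLMENT_RATE
        * COLLEGE_GRADUATION_RATE
    )


graduates = college_graduates(100)
print(f"{graduates:.1f} college graduates per 100 high school entrants")
```

The product is about 34.8, so roughly 35 out of every 100 students who start high school end up with a college degree.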

Now let's look at an editorial written by Marcia McNutt, President of the United States National Academy of Sciences. The editorial appears in the Nov. 11 issue of Science [Higher education for all]. She begins by emphasizing the importance of a college degree in terms of new jobs and the wealth of nations.

Currently, 75% of new jobs require a college degree. Yet in the US and Europe, only 40% of young adults attend a 2-year or 4-year college—a percentage that has either not budged or only modestly risen in more than two decades— despite a college education being one of the proven ways to lift the socioeconomic status of underprivileged populations and boost the wealth of nations.

There's no question that well-educated graduates will contribute to society in many ways but there is a question about what "well-educated" really means. Is it teaching specific job skills or is it teaching students how to think? I vote for teaching critical thinking and not for job training. I think that creating productive citizens who can fill a variety of different jobs is a side-benefit of preparing them to cope with a complex society that requires critical thinking. I don't think my view is exactly the same as Marcia McNutt's because she emphasizes training as a main goal of college education.

Universities, without building additional facilities, could expand universal and life-long access to higher education by promoting more courses online and at satellite community-college campuses.

Statements like that raise two issues that don't get enough attention. The first one concerns the number of students who should graduate from college in an ideal world. What is that number and at what stage should it be enhanced? Should there be more high school graduates going to college? If so, does that require lowering the bar for admission or is the cost of college the main impediment? Is there a higher percentage of students entering college in countries with free, or very low, tuition? Should there be more students graduating? If so, one easy way to do that is to make university courses easier. Is that what we want?

The question that's never asked is what percentage of the population is smart enough to get a college degree? Is it much higher than 40%?

The second issue concerns the quality of education. The model that I suggested above is not consistent with online courses and there's a substantial number of papers in the pedagogical literature showing that student-centered education doesn't work very well online. Does that mean that we should adopt a different way of teaching in order to make college education more accessible? If so, at what cost?

McNutt gives us an example of the kind of change she envisages.

At Colorado College, students complete a lab science course in only 4 weeks, attending lectures in the morning and labs in the afternoon. This success suggests that US universities could offer 2-week short courses that include concentrated, hands-on learning and teamwork in the lab and the field for students who already mastered the basics through online lectures. Such an approach is more common in European institutions of higher education and would allow even those with full-time employment elsewhere to advance their skills during vacations or employer-supported sabbaticals for the purpose of improving the skills of the workforce. Opportunities abound for partnerships with industry for life-long learning. The availability of science training in this format could also be a boon for teachers seeking to fill gaps in their science understanding.

This is clearly a form of college education that focuses on job skills and even goes as far as suggesting that industry could be a "partner" in education. (As an aside, it's interesting that government employers, schools, and nonprofits are never asked to be partners in education even though they hire a substantial number of college graduates.)

Do you agree that the USA should be expanding the number of students who graduate from college and do you agree that the goal is to give them the skills needed to get a job?

Tuesday, November 08, 2022

Science education in an age of misinformation

I just read an annoying article in Boston Review: The Inflated Promise of Science Education. It was written by Catarina Dutilh Novaes, a Professor of Philosophy, and Silvia Ivani, a teaching fellow in philosophy. Their main point was that the old-fashioned way of teaching science has failed because the general public mistrusts scientists. This mistrust stems, in part, from "legacies of scientific or medical racism and the commercialization of biomedical science."

The way to fix this, according to the authors, is for scientists to address these "perceived moral failures" by engaging more with society.

"Clearly, scientific education ought to mean the implanting of a rational, sceptical, experimental habit of mind. It ought to mean acquiring a method – a method that can be used on any problem that one meets – and not simply piling up a lot of facts."
George Orwell

"... science should be done with and for society; research and innovation should be the product of the joint efforts of scientists and citizens and should serve societal interests. To advance this goal, Horizon 2020 encouraged the adoption of dialogical engagement practices: those that establish two-way communication between experts and citizens at various stages of the scientific process (including in the design of scientific projects and planning of research priorities)."

This is nonsense. It has nothing to do with science education; instead, the authors are focusing on policy decisions such as convincing people to get vaccinations.

The good news is that the Boston Review article links to a report from Stanford University that's much more intelligent: Science Education in an Age of Misinformation. The philosophers think that this report advocates "... a well-meaning but arguably limited approach to the problem along the lines of the deficit model ...." where "deficit model" refers to a mode of science communication where scientists just dispense knowledge to the general public who are supposed to accept it uncritically.

I don't know of very many science educators who think this is the right way to teach. I think the prevailing model is to teach the nature of science (NOS) [The Nature of Science (NOS)]. That requires educating students and the general public about the way science goes about creating knowledge and why evidence-based knowledge is reliable. It's connected to teaching critical thinking, not teaching a bunch of scientific facts. The "deficit model" is not the current standard in science education and it hasn't been seriously defended for decades.

"Appreciating the scientific process can be even more important than knowing scientific facts. People often encounter claims that something is scientifically known. If they understand how science generates and assesses evidence bearing on these claims, they possess analytical methods and critical thinking skills that are relevant to a wide variety of facts and concepts and can be used in a wide variety of contexts." (National Science Foundation, Science and Technology Indicators, 2008)

An important part of the modern approach as described in the Stanford report is teaching students (and the general public) how to gather information and determine whether or not it's reliable. That means you have to learn how to evaluate the reliability of your sources and whether you can trust those who claim to be experts. I taught an undergraduate course on this topic for many years and I learned that it's not easy to teach the nature of science and critical thinking.

The Stanford Report is about the nature of science (NOS) model and how to implement it in the age of social media. Specifically, it's about teaching ways to evaluate your sources when you are inundated with misinformation.

The main part of this approach is going to seem controversial to many because it emphasizes the importance of experts and there's a growing reluctance in our society to trust experts. That's what the Boston Review article was all about. The solutions advocated by the authors of that article are very different from the ones presented in the Stanford report.

The authors of the Stanford report recognize that there's a widespread belief that well-educated people can make wise decisions based entirely on their own knowledge and judgement, in other words, that they can be "intellectually independent." They reject that false belief.

The ideal envisioned by the great American educator and philosopher John Dewey—that it is possible to educate students to be fully intellectually independent—is simply a delusion. We are always dependent on the knowledge of others. Moreover, the idea that education can educate independent critical thinkers ignores the fact that to think critically in any domain you need some expertise in that domain. How then, is education to prepare students for a context where they are faced with knowledge claims based on ideas, evidence, and arguments they do not understand?

The goal of science education is to teach students how to figure out which source of information is supported by the real experts and that's not an easy task. It seems pretty obvious that scientists are the experts but not all scientists are experts so how do you tell the difference between science quacks and expert scientists?

The answer requires some knowledge about how science works and how scientists behave. The Stanford report says that this means acquiring an understanding of "science as a social practice." I think "social practice" is a bad choice of terms and I would have preferred that they stick with "nature of science" but that was their choice.

The mechanism for recognizing the real experts relies on critical thinking but it's not easy. Two of the lead authors¹ of the Stanford Report published a short synopsis in Science last month (October 2022) [https://doi.org/10.1126/science.abq8-93]. Their heuristic is shown on the right.

The idea here is that you can judge the quality of scientific information by questioning the credentials of the authors. This is how "outsiders" can make judgements about the quality of the information without being experts themselves. The rules are pretty good but I wish there had been a bit more on "Unbiased scientific information" as a criterion. I think that you can make a judgement based on whether the "experts" take the time to discuss alternative hypotheses and explain the positions of those who disagree with them but this only applies to genuine scientific controversies and if you don't know that there's a controversy then you have no reason to apply this filter.

For example, if a paper is telling you about the wonderful world of regulatory RNAs and points out that there are 100,000 newly discovered genes for these RNAs, you would have no reason to question that if the scientists have no conflict of interest and come from prestigious universities. You would have to rely on the reviewers of the paper, and the journal, to insist that alternative explanations (e.g. junk RNA) were mentioned. That process doesn't always work.

There's no easy way to fix that problem. Scientists are biased all the time but outsiders (i.e. non-experts) have no way of recognizing bias. I used to think that we could rely on science journalists to alert us to these biases and point out that the topic is controversial and no consensus has been reached. That didn't work either.

At least the heuristic works some of the time so we should make sure we teach it to students of all ages. It would be even nicer if we could teach scientists how to be credible and how to recognize their own biases.

1. The third author is my former supervisor, Bruce Alberts, who has been interested in science education for more than fifty years. He did a pretty good job of educating me! :-)

Saturday, November 05, 2022

Nature journalist is confused about noncoding RNAs and junk

Nature Methods is one of the journals in Nature Portfolio published by Springer Nature. Its focus is novel methods in the life sciences.

The latest issue (October, 2022) highlights the issues with identifying functional noncoding RNAs and the editorial, Decoding noncoding RNAs, is quite good—much better than the comments in other journals. Here's the final paragraph.

Despite the increasing prominence of ncRNA, we remind readers that the presence of a ncRNA molecule does not always imply functionality. It is also possible that these transcripts are non-functional or products from, for example, splicing errors. We hope this Focus issue will provide researchers with practical advice for deciphering ncRNA’s roles in biological processes.

However, this praise is mitigated by the appearance of another article in the same journal. Science journalist Vivien Marx has written a commentary with a title that was bound to catch my eye: How noncoding RNAs began to leave the junkyard. Here's the opening paragraph.

Junk. In the view of some, that’s what noncoding RNAs (ncRNAs) are — genes that are transcribed but not translated into proteins. With one of his ncRNA papers, University of Queensland researcher Tim Mercer recalls that two reviewers said, “this is good” and the third said, “this is all junk; noncoding RNAs aren’t functional.” Debates over ncRNAs, in Mercer’s view, have generally moved from ‘it’s all junk’ to ‘which ones are functional?’ and ‘what are they doing?’

This is the classic setup for a paradigm shaft. What you do is create a false history of a field and then reveal how your ground-breaking work has shattered the long-standing paradigm. In this case, the false history is that the standard view among scientists was that ALL noncoding RNAs were junk. That's nonsense. It means that these old scientists must have dismissed ribosomal RNA and tRNA back in the 1960s. But even if you grant that those were exceptions, it means that they knew nothing about Sidney Altman's work on RNAse P (Nobel Prize, 1989), or 7SL RNA (Alu elements), or the RNA components of spliceosomes (snRNAs), or PiWiRNAs, or snoRNAs, or microRNAs, or a host of regulatory RNAs that have been known for decades.

Knowledgeable scientists knew full well that there are many functional noncoding RNAs and that includes some that are called lncRNAs. As the editorial says, these knowledgeable scientists are warning about attributing function to all transcripts without evidence. In other words, many of the transcripts found in human cells could be junk RNA in spite of the fact that there are also many functional noncoding RNAs.

So, Tim Mercer is correct, the debate is over which ncRNAs are functional and that's the same debate that's been going on for 50 years. Move along folks, nothing to see here.

The author isn't going to let this go. She decides to interview John Mattick, of all people, to get a "proper" perspective on the field. (Tim Mercer is a former student of Mattick's.) Unfortunately, that perspective contains no information on how many functional ncRNAs are present and on what percentage of the genome their genes occupy. It's gonna take several hundred thousand lncRNA genes to make a significant impact on the amount of junk DNA but nobody wants to say that. With John Mattick you get a twofer: a false history (paradigm strawman) plus no evidence that your discoveries are truly revolutionary.

Nature Methods should be ashamed, not for presenting the views of John Mattick—that's perfectly legitimate—but for not putting them in context and presenting the other side of the controversy. Surely at this point in time (2022) we should all know that Mattick's views are on the fringe and most transcripts really are junk RNA?

Monday, October 17, 2022

University press releases are a major source of science misinformation

Here's an example of a press release that distorts science by promoting incorrect information that is not found in the actual publication.

The problems with press releases are well-known but nobody is doing anything about them. I really like the discussion in Stuart Ritchie's recent (2020) book where he begins with the famous "arsenic affair" in 2010. Sandwalk readers will recall that this started with a press conference by NASA announcing that arsenic replaces phosphorus in the DNA of some bacteria. The announcement was treated with contempt by the blogosphere and eventually the claim was disproved by Rosie Redfield who showed that the experiment was flawed [The Arsenic Affair: No Arsenic in DNA!].

This was a case where the science was wrong and NASA should have known before it called a press conference. Ritchie goes on to document many cases where press releases have distorted the science in the actual publication. He doesn't mention the most egregious example, the ENCODE publicity campaign that successfully convinced most scientists that junk DNA was dead [The 10th anniversary of the ENCODE publicity campaign fiasco].

I like what he says about "churnalism" ...

In an age of 'churnalism', where time-pressed journalists often simply repeat the content of press releases in their articles (science news reports are often worded virtually identically to a press release), scientists have a great deal of power, and a great deal of responsibility. The constraints of peer review, lax as they might be, aren't present at all when engaging with the media, and scientists' biases about the importance of their results can emerge unchecked. Frustratingly, once the hype bubble has been inflated by a press release, it's difficult to burst.

Press releases of all sorts are failing us but university press releases are the most disappointing because we expect universities to be credible sources of information. It's obvious that scientists have to accept the blame for deliberately distorting their findings but surely the information offices at universities are also at fault? I once suggested that every press release has to include a statement, signed by the scientists, saying that the press release accurately reports the results and conclusions that are in the published article and does not contain any additional information or speculation that has not passed peer review.

Let's look at a recent example where the scientists would not have been able to truthfully sign such a statement.

A group of scientists based largely at The University of Sheffield in Sheffield (UK) recently published a paper in Nature on DNA damage in the human genome. They noted that such damage occurs preferentially at promoters and enhancers and is associated with demethylation and transcription activation. They presented evidence that the genome can be partially protected by a protein called "NuMA." I'll show you the abstract below but for now that's all you need to know.

The University of Sheffield decided to promote itself by issuing a press release: Breaks in ‘junk’ DNA give scientists new insight into neurological disorders. This title is a bit of a surprise since the paper only talks about breaks in enhancers and promoters and the word "junk" doesn't appear anywhere in the published report in Nature.

The first paragraph of the press release isn't very helpful.

‘Junk’ DNA could unlock new treatments for neurological disorders as scientists discover how its breaks and repairs affect our protection against neurological disease.

What could this mean? Surely they don't mean to imply that enhancers and promoters are "junk DNA"? That would be really, really, stupid. The rest of the press release should explain what they mean.

The groundbreaking research from the University of Sheffield’s Neuroscience Institute and Healthy Lifespan Institute gives important new insights into so-called junk DNA—or DNA previously thought to be non-essential to the coding of our genome—and how it impacts on neurological disorders such as Motor Neurone Disease (MND) and Alzheimer’s.

Until now, the body’s repair of junk DNA, which can make up 98 per cent of DNA, has been largely overlooked by scientists, but the new study published in Nature found it is much more vulnerable to breaks from oxidative genomic damage than previously thought. This has vital implications on the development of neurological disorders.

Oops! Apparently, they really are that stupid. The scientists who did this work seem to think that 98% of our genome is junk and that includes all the regulatory sequences. It seems like they are completely unaware of decades of work on discovering the function of these regulatory sequences. According to The University of Sheffield, these regulatory sequences have been "largely overlooked by scientists." That will come as a big surprise to many of my colleagues who worked on gene regulation in the 1980s and in all the decades since then. It will probably also be a surprise to biochemistry and molecular biology undergraduates at Sheffield; at least I hope it will be a surprise.

Professor Sherif El-Khamisy, Chair in Molecular Medicine at the University of Sheffield, Co-founder and Deputy Director of the Healthy Lifespan Institute, said: “Until now the repair of what people thought is junk DNA has been mostly overlooked, but our study has shown it may have vital implications on the onset and progression of neurological disease."

I wonder if Professor Sherif El-Khamisy can name a single credible scientist who thinks that regulatory sequences are junk DNA?

There's no excuse for propagating this kind of misinformation about junk DNA. It's completely unnecessary and serves only to discredit the university and its scientists.

Ray, S., Abugable, A.A., Parker, J., Liversidge, K., Palminha, N.M., Liao, C., Acosta-Martin, A.E., Souza, C.D.S., Jurga, M., Sudbery, I. and El-Khamisy, S.F. (2022) A mechanism for oxidative damage repair at gene regulatory elements. Nature, 609:1038-1047. doi: 10.1038/s41586-022-05217-8

Oxidative genome damage is an unavoidable consequence of cellular metabolism. It arises at gene regulatory elements by epigenetic demethylation during transcriptional activation [1,2]. Here we show that promoters are protected from oxidative damage via a process mediated by the nuclear mitotic apparatus protein NuMA (also known as NUMA1). NuMA exhibits genomic occupancy approximately 100 bp around transcription start sites. It binds the initiating form of RNA polymerase II, pause-release factors and single-strand break repair (SSBR) components such as TDP1. The binding is increased on chromatin following oxidative damage, and TDP1 enrichment at damaged chromatin is facilitated by NuMA. Depletion of NuMA increases oxidative damage at promoters. NuMA promotes transcription by limiting the polyADP-ribosylation of RNA polymerase II, increasing its availability and release from pausing at promoters. Metabolic labelling of nascent RNA identifies genes that depend on NuMA for transcription including immediate–early response genes. Complementation of NuMA-deficient cells with a mutant that mediates binding to SSBR, or a mitotic separation-of-function mutant, restores SSBR defects. These findings underscore the importance of oxidative DNA damage repair at gene regulatory elements and describe a process that fulfils this function.

Thursday, October 13, 2022

Macroevolution

(This is a copy of an essay that I published in 2006. I made some minor revisions to remove outdated context.)

Overheard at breakfast on the final day of a recent scientific meeting: "Do you believe in macroevolution?" Came the reply: "Well, it depends on how you define it."

Roger Lewin (1980)

There is no difference between micro- and macroevolution except that genes between species usually diverge, while genes within species usually combine. The same processes that cause within-species evolution are responsible for above-species evolution.

John Wilkins

The minimalist definition of evolution is a change in the hereditary characteristics of a population over the course of many generations. This is a definition that helps us distinguish between changes that are not evolution and changes that meet the minimum criteria. The definition comes from the field of population genetics developed in the early part of the last century. The modern theory of evolution owes much to population genetics and our understanding of how genes work. But is that all there is to evolution?

The central question of the Chicago conference was whether the mechanisms underlying microevolution can be extrapolated to explain the phenomena of macroevolution. At the risk of doing violence to the positions of some of the people at the meeting, the answer can be given as a clear, No.

Roger Lewin (1980)

No. There's also common descent—the idea that all life has evolved from primitive species over billions of years. Common descent is about the history of life. In this essay I'll describe the main features of how life evolved but keep in mind that this history is a unique event that is accidental, contingent, quirky, and unpredictable. I'll try and point out the most important controversies about common descent.

The complete modern theory of evolution encompasses much more than changes in the genetics of a population. It includes ideas about the causes of speciation, long-term trends, and mass extinctions. This is the domain of macroevolution—loosely defined as evolution above the species level. The kind of evolution that focuses on genes in a population is usually called microevolution.

As a biochemist and a molecular biologist, I tend to view evolution from a molecular perspective. My main interest is molecular evolution and the analysis of sequences of proteins and nucleic acids. One of the goals in writing this essay is to explain this aspect of evolution to the best of my limited ability. However, another important goal is to show how molecular evolution integrates into the bigger picture of evolution as described by all other evolutionary biologists, including paleontologists. When dealing with macroevolution this is very much a learning experience for me since I'm not an expert. Please bear with me while we explore these ideas.

It's difficult to define macroevolution because it's a field of study and not a process. Mark Ridley has one of the best definitions I've seen ...

Macroevolution means evolution on the grand scale, and it is mainly studied in the fossil record. It is contrasted with microevolution, the study of evolution over short time periods, such as that of a human lifetime or less. Microevolution therefore refers to changes in gene frequency within a population .... Macroevolutionary events are more likely to take millions, probably tens of millions of years. Macroevolution refers to things like the trends in horse evolution described by Simpson, and occurring over tens of millions of years, or the origin of major groups, or mass extinctions, or the Cambrian explosion described by Conway Morris. Speciation is the traditional dividing line between micro- and macroevolution.

Mark Ridley (1997) p. 227

When we talk about macroevolution we're talking about studies of the history of life on Earth. This takes in all the events that affect the actual historical lineages leading up to today's species. Jeffrey S. Levinton makes this point in his description of the field of macroevolution and it's worth quoting what he says in his book Genetics, Paleontology, and Macroevolution.

Macroevolution must be a field that embraces the ecological theater, including the range of time scales of the ecologist, to the sweeping historical changes available only to paleontological study. It must include the peculiarities of history, which must have had singular effects on the directions that the composition of the world's biota took (e.g., the splitting of continents, the establishment of land and oceanic isthmuses). It must take the entire network of phylogenetic relationships and impose a framework of genetic relationships and appearances of character changes. Then the nature of evolutionary directions and the qualitative transformation of ancestor to descendant over major taxonomic distances must be explained.

Jeffrey S. Levinton (2001) p.6

Levinton then goes on to draw a parallel between microevolution and macroevolution on the one hand, and physics and astronomy on the other. He points out that the structure and history of the known universe have to be consistent with modern physics, but that's not sufficient. He gives the big bang as an example of a cosmological hypothesis that doesn't derive directly from fundamental physics. I think this analogy is insightful. Astronomers study the life and death of stars and the interactions of galaxies. Some of them are interested in the formation of planetary systems, especially the unique origin of our own solar system. Explanations of these "macro" phenomena depend on the correctness of the underlying "micro" physics phenomena (e.g., gravity, relativity) but there's more to the field of astronomy than that.

Levinton continues ....

Does the evolutionary biologist differ very much from this scheme of inference? A set of organisms exists today in a partially measurable state of spatial, morphological, and chemical relationships. We have a set of physical and biological laws that might be used to construct predictions about the outcome of the evolutionary process. But, as we all know, we are not very successful, except at solving problems at small scales. We have plausible explanations for the reason why moths living in industrialized areas are rich in dark pigment, but we don't know whether or why life arose more than once or why some groups became extinct (e.g., the dinosaurs) whereas others managed to survive (e.g., horseshoe crabs). Either our laws are inadequate and we have not described the available evidence properly or no such laws can be devised to predict uniquely what should have happened in the history of life. For better or worse, macroevolutionary biology is as much historical as is astronomy, perhaps with looser laws and more diverse objectives....

Indeed, the most profound problem in the study of evolution is to understand how poorly repeatable historical events (e.g., the trapping of an endemic radiation in a lake that dries up) can be distinguished from lawlike repeatable processes. A law that states 'an endemic radiation will become extinct if its structural habitat disappears' has no force because it maps to the singularity of a historical event.

Jeffrey S. Levinton (2001) p.6-7

In conclusion, then, macroevolutionary processes are underlain by microevolutionary phenomena and are compatible with microevolutionary theories, but macroevolutionary studies require the formulation of autonomous hypotheses and models (which must be tested using macroevolutionary evidence). In this (epistemologically) very important sense, macroevolution is decoupled from microevolution: macroevolution is an autonomous field of evolutionary study.

Francisco J. Ayala (1983)

I think it's important to appreciate what macroevolutionary biologists are saying. Most of these scientists are paleontologists and they think of their area of study as an interdisciplinary field that combines geology and biology. According to them, there's an important difference between evolutionary theory and the real history of life. The actual history has to be consistent with modern evolutionary theory (it is) but the unique sequence of historical events doesn't follow directly from application of evolutionary theory. Biological mechanisms such as natural selection and random genetic drift are part of a much larger picture that includes moving continents, asteroid impacts, ice ages, contingency, etc. The field of macroevolution addresses these big picture issues.

Clearly, there are some evolutionary biologists who are only interested in macroevolution. They don't care about microevolution. This is perfectly understandable since they are usually looking at events that take place on a scale of millions of years. They want to understand why some species survive while others perish and why there are some long-term trends in the history of life. (Examples of such trends are the loss of toes during the evolution of horses, the development of elaborate flowers during the evolution of vascular plants, and the tendency of diverse species, such as the marsupial Tasmanian wolf and the common placental wolf, to converge on a similar body plan.)

Nobody denies that macroevolutionary processes involve the fundamental mechanisms of natural selection and random genetic drift, but these microevolutionary processes are not sufficient, by themselves, to explain the history of life. That's why, in the domain of macroevolution, we encounter theories about species sorting and tracking, species selection, and punctuated equilibria.

Micro- and macroevolution are thus different levels of analysis of the same phenomenon: evolution. Macroevolution cannot solely be reduced to microevolution because it encompasses so many other phenomena: adaptive radiation, for example, cannot be reduced only to natural selection, though natural selection helps bring it about.

Eugenie C. Scott (2004)

As I mentioned earlier, most of macroevolutionary theory is intimately connected with the observed fossil record and, in this sense, it is much more historical than population genetics and evolution within a species. Macroevolution, as a field of study, is the turf of paleontologists and much of the debate about a higher level of evolution (above species and populations) is motivated by the desire of paleontologists to be accepted at the high table of evolutionary theory. It's worth recalling that during the last part of the twentieth century evolutionary theorizing was dominated by population geneticists. Their perspective was described by John Maynard Smith, "... the attitude of population geneticists to any paleontologist rash enough to offer a contribution to evolutionary theory has been to tell him to go away and find another fossil, and not to bother the grownups." (Maynard Smith, 1984)

The distinction between microevolution and macroevolution is often exaggerated, especially by the anti-science crowd. Creationists have gleefully exploited the distinction in order to legitimate their position in the light of clear and obvious examples of evolution that they can't ignore. They claim they can accept microevolution, but they reject macroevolution.

In the real world—the one inhabited by rational human beings—the difference between macroevolution and microevolution is basically a difference in emphasis and level. Some evolutionary biologists are interested in species, trends, and the big picture of evolution, while others are more interested in the mechanics of the underlying mechanisms.

Speciation is critical to conserving the results of both natural selection and genetic drift. Speciation is obviously central to the fate of genetic variation, and a major shaper of patterns of evolutionary change through evolutionary time. It is as if Darwinians, neo- and ultra- most certainly included, care only for the process generating change, and not about its ultimate fate in geological time.

Niles Eldredge (1995)

The Creationists would have us believe there is some magical barrier separating selection and drift within a species from the evolution of new species and new characteristics. Not only is this imagined barrier invisible to most scientists but, in addition, there is abundant evidence that no such barrier exists. We have numerous examples that show how diverse species are connected by a long series of genetic changes. This is why many scientists claim that macroevolution is just lots of microevolution over a long period of time.

But wait a minute. I just said that many scientists think of macroevolution as simply a scaled-up version of microevolution, but a few paragraphs ago I said there's more to the theory of evolution than just changes in the frequency of alleles within a population. Don't these statements conflict? Yes, they do ... and therein lies a problem.

When the principal tenets of the Modern Synthesis were being worked out in the 1940s, one of the fundamental conclusions was that macroevolution could be explained by changes in the frequency of alleles within a population due, mostly, to natural selection. This gave rise to the commonly accepted notion that macroevolution is just a lot of microevolution. Let's refer to this as the sufficiency of microevolution argument.

At the time of the synthesis, there were several other explanations that attempted to decouple macroevolution from microevolution. One of these was saltation, or the idea that macroevolution was driven by large-scale mutations (macromutations) leading to the formation of new species. This is the famous "hopeful monster" theory of Goldschmidt. Another decoupling hypothesis was called orthogenesis, or the idea that there is some intrinsic driving force that directs evolution along certain pathways. Some macroevolutionary trends, such as the increase in the size of horses, were thought to be the result of this intrinsic force.

Both of these ideas about macroevolutionary change (saltation and orthogenesis) had support from a number of evolutionary biologists. Both were strongly opposed by the group of scientists that produced the Modern Synthesis. One of the key players was the paleontologist George Gaylord Simpson whose books Tempo and Mode in Evolution (1944) and The Major Features of Evolution (1953) attempted to combine paleontology and population genetics. "Tempo" is often praised by evolutionary biologists and many of our classic examples of evolution, such as the bushiness of the horse tree, come from that book. Its influence on paleontologists was profound because it upset the traditional view that macroevolution and the newfangled genetics had nothing in common.

Just as mutation and drift introduce a strong random component into the process of adaptation, mass extinctions introduce chance into the process of diversification. This is because mass extinctions are a sampling process analogous to genetic drift. Instead of sampling allele frequencies, mass extinctions sample species and lineages. ... The punchline? Chance plays a large role in the processes responsible for adaptation and diversity.

Freeman and Herron (1998)

We see, in context, that the blurring of the distinction between macroevolution and microevolution was part of a counter-attack on the now discredited ideas of saltation and orthogenesis. As usual, when pressing the attack against objectionable ideas, there's a tendency to overrun the objective and inflict collateral damage. In this case, the attack on orthogenesis and the old version of saltation was justified since neither of these ideas offers a viable alternative to natural selection and drift as mechanisms of evolution. Unfortunately, Simpson's attack was so successful that a generation of scientists grew up thinking that macroevolution could be entirely explained by microevolutionary processes. That's why we still see this position being advocated today and that's why many biology textbooks promote the sufficiency of microevolution argument. Gould argues, successfully in my opinion, that the sufficiency of microevolution became dogma during the hardening of the synthesis in the 1950s and 1960s. It was part of an emphasis on the individual as the only real unit of selection.

However, from the beginning of the Modern Synthesis there were other evolutionary biologists who wanted to decouple macroevolution and microevolution—not because they believed in the false doctrines of saltation and orthogenesis, but because they knew of higher level processes that went beyond microevolution. One of these was Ernst Mayr. In his essay "Does Microevolution Explain Macroevolution," Mayr says ...

Among all the claims made during the evolutionary synthesis, perhaps the one that found least acceptance was the assertion that all phenomena of macroevolution can be ‘reduced to,' that is, explained by, microevolutionary genetic processes. Not surprisingly, this claim was usually supported by geneticists but was widely rejected by the very biologists who dealt with macroevolution, the morphologists and paleontologists. Many of them insisted that there is more or less complete discontinuity between the processes at the two levels—that what happens at the species level is entirely different from what happens at the level of the higher categories. Now, 50 years later the controversy remains undecided.

Ernst Mayr (1988) p.402

Mayr goes on to make several points about the difference between macroevolution and microevolution. In particular, he emphasizes that macroevolution is concerned with phenotypes and not genotypes, "In this respect, indeed, macroevolution as a field of study is completely decoupled from microevolution." (ibid p. 403). This statement reiterates an important point, namely that macroevolution is a "field of study" and, as such, its focus differs from that of other fields of study such as molecular evolution.

If you think of macroevolution as a field of study rather than a process, then it doesn't make much sense to say that macroevolution can be explained by the process of changing alleles within a population. This would be like saying the entire field of paleontology can be explained by microevolution. This is the point about the meaning of the term "macroevolution" that is so often missed by those who dismiss it as just a bunch of microevolution.

The orthodox believers in the hardened synthesis feel threatened by macroevolution since it implies a kind of evolution that goes beyond the natural selection of individuals within a population. The extreme version of this view is called adaptationism and the believers are called Ultra-Darwinians by their critics. This isn't the place to debate adaptationism: for now, let's just assume that the sufficiency of microevolution argument is related to the pluralist-adaptationist controversy and see how our concept of macroevolution as a field of study relates to the issue. Niles Eldredge describes it like this ...

The very term macroevolution is enough to make an ultra-Darwinian snarl. Macroevolution is counterpoised with microevolution: generation by generation selection-mediated change in gene frequencies within populations. The debate is over the question, Are conventional Darwinian microevolutionary processes sufficient to explain the entire history of life? To ultra-Darwinians, the very term macroevolution suggests that the answer is automatically no. To them, macroevolution implies the action of processes, even genetic processes, that are as yet unknown but must be imagined to yield a satisfactory explanation of the history of life.

But macroevolution need not carry such heavy conceptual baggage. In its most basic usage, it simply means evolution on a large-scale. In particular, to some biologists, it suggests the origin of major groups - such as the origin and radiation of mammals, or the derivation of whales and bats from terrestrial mammalian ancestors. Such sorts of events may or may not demand additional theory for their explanation. Traditional Darwinian explanation, of course, insists not.

Niles Eldredge (1995) p. 126-127

Eldredge sees macroevolution as a field of study that's mostly concerned with evolution on a large scale. Since he's a paleontologist, it's likely that, for him, macroevolution is the study of evolution based on the fossil record. Eldredge is quite comfortable with the idea that one of the underlying causes of evolution can be natural selection—this includes many changes seen over the course of millions of years. In other words, there is no conflict between microevolution and macroevolution in the sense that microevolution stops and is replaced by macroevolution above the level of species. But there is a conflict in the sense that Eldredge, and many other evolutionary biologists, do not buy the sufficiency of microevolution argument. They believe there are additional theories, and mechanisms, needed to explain macroevolution. Gould says it best ....

We do not advance some special theory for long times and large transitions, fundamentally opposed to the processes of microevolution. Rather, we maintain that nature is organized hierarchically and that no smooth continuum leads across levels. We may attain a unified theory of process, but the processes work differently at different levels and we cannot extrapolate from one level to encompass all events at the next. I believe, in fact, that ... speciation by splitting guarantees that macroevolution must be studied at its own level. ... [S]election among species—not an extrapolation of changes in gene frequencies within populations—may be the motor of macroevolutionary trends. If macroevolution is, as I believe, mainly a story of the differential success of certain kinds of species and, if most species change little in the phyletic mode during the course of their existence, then microevolutionary change within populations is not the stuff (by extrapolation) of major transformations.

Stephen Jay Gould (1980b) p. 170

Naturalists such as Ernst Mayr and paleontologists such as Gould and Eldredge have all argued convincingly that speciation is an important part of evolution. Since speciation is not a direct consequence of changes in the frequencies of alleles in a population, it follows that microevolution is not sufficient to explain all of evolution. Gould and Eldredge (and others) go even further to argue that there are processes such as species sorting that can only take place above the species level. This means there are evolutionary theories that only apply in the domain of macroevolution.

The idea that there's much more to evolution than genes and population genetics was a favorite theme of Stephen Jay Gould. He advocated a pluralist, hierarchical approach to evolution and his last book The Structure of Evolutionary Theory emphasized macroevolutionary theory—although he often avoided using this term. The Structure of Evolutionary Theory is a huge book that has become required reading for anyone interested in evolution. Remarkably, there's hardly anything in the book about population genetics, molecular evolution, and microevolution as popularly defined. What better way of illustrating that macroevolution must be taken seriously!

Macroevolutionary theory tries to identify patterns and trends that help us understand the big picture. In some cases, macroevolutionary biologists have recognized generalities (theories & hypotheses) that only apply to higher level processes. Punctuated equilibria and species sorting are examples of such higher level phenomena. The possible periodicity of mass extinctions might be another.

Remember that macroevolution should not be contrasted with microevolution because macroevolution deals with history. Microevolution and macroevolution are not competing explanations of the history of life any more than astronomy and physics compete for the correct explanation of the history of the known universe. Both types of explanation are required.

I think species sorting is the easiest higher level phenomenon to describe. It illustrates a mechanism that is clearly distinct from changes in the frequencies of alleles within a population. In this sense, it will help explain why microevolution isn't a sufficient explanation for the evolution of life. Of course, one needs to emphasize that macroevolution must be consistent with microevolution.

I have championed contingency, and will continue to do so, because its large realm and legitimate claims have been so poorly attended by evolutionary scientists who cannot discern the beat of this different drummer while their brains and ears remain tuned to only the sounds of general theory.

Stephen Jay Gould (2002)

If we could track a single lineage through time, say from a single-cell protist to Homo sapiens, then we would see a long series of mutations and fixations as each ancestral population evolved. It might look as though the entire history could be accounted for by microevolutionary processes. This is an illusion because the track of the single lineage ignores all of the branching and all of the other species that lived and died along the way. That track would not explain why Neanderthals became extinct and Cro-Magnon survived. It would not explain why modern humans arose in Africa. It would not tell us why placental mammals became more successful than the dinosaurs. It would not explain why humans don't have wings and can't breathe underwater. It doesn't tell us whether replaying the tape of life will automatically lead to humans. All of those things are part of the domain of macroevolution and microevolution isn't sufficient to help us understand them.

Tuesday, October 11, 2022

On reasoning with creationists

I've been trying to reason with creationists for more than 30 years, beginning with debates on talk.origins back in the early 1990s. Sometimes we make a little progress but most of the time it's very frustrating.

Over the years, we've encountered a few outstanding examples of creationists whose "reasoning" abilities defy explanation. One of the most famous is Otangelo Grasso - his ability to misunderstand and misconstrue science is legendary. He is one of only a small number of people who are banned from Sandwalk.

Here's an example of his unique unreasoning abilities.

Trying to educate a creationist (Otangelo Grasso)

I bring this up because he recently posted an article on the Uncommon Descent blog and you just have to read it if you want a good laugh. It shows you that 30 years of attempting to teach science to creationists isn't nearly long enough.

Otangelo Grasso on the difficulties of reasoning with atheists

Monday, October 03, 2022

Evolution by chance

Can natural selection occur by chance or accident? No, with qualifications. Can evolution occur by chance or accident? Yes, definitely.

While tidying up my office I came across an anthology of articles by Richard Dawkins. It included a 2009 review of Jerry Coyne's book Why Evolution Is True (2009) and one of Richard's comments caught my eye because it illustrates the difference between Dawkins' view of evolution and the current mainstream view that was described by Jerry in his book.

I can illustrate this difference by first quoting from Jerry Coyne's book.

This brings up the most widespread misunderstanding about Darwinism: the idea that, in evolution, "everything happens by chance" (also stated as "everything happens by accident"). This common claim is flatly wrong. No evolutionist—and certainly not Darwin—ever argued that natural selection is based on chance ....

True, the raw materials for evolution, the variations between individuals, are indeed produced by chance mutations. These mutations occur willy-nilly, regardless of whether they are good or bad for the individual. But it is the filtering of that variation by natural selection that produces adaptations, and natural selection is manifestly not random. (p. 119)

It's extremely important to notice that Coyne is referring to NATURAL SELECTION (or Darwinism) in this passage. Natural selection is not random or accidental, according to Coyne. This passage is followed just a few pages later by a section titled "Evolution Without Selection."

Let's take a brief digression here, because it's important to appreciate that natural selection isn't the only process of evolutionary change. Most biologists define evolution as a change in the proportion of alleles (different forms of a gene) in the population.

[Coyne then describes an example of random genetic drift and continues ...] Both drift and selection produce the genetic change that we recognize as evolution. But there's an important difference. Drift is a random process, while selection is the antithesis of randomness. Genetic drift can change the frequencies of alleles regardless of how useful they are to their carrier. Selection, on the other hand, always gets rid of harmful alleles and raises the frequencies of beneficial ones. (pp. 122-123)

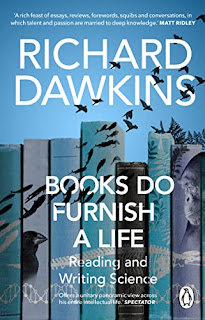

Now let's look at Richard Dawkins' review of Coyne's book as published in the Times Literary Supplement in 2009 and reprinted in Books Do Furnish a Life (2021). I picked out an interesting passage from that review in order to illustrate a point.

Coyne is right to identify the most widespread misunderstanding about Darwinism as 'the idea that, in evolution, 'everything happens by chance' ... This common claim is flatly wrong.' Not only is it flatly wrong, it is obviously wrong, transparently wrong, even to the meanest intelligence (a phrase that has me actively restraining myself). If evolution worked by chance, it obviously couldn't work at all. (p. 427)

That last sentence is jarring to many scientists, including me. I think that Dawkins' statement is 'obviously wrong' and 'transparently wrong' because, as Coyne pointed out, evolution by random genetic drift can occur by chance. [Let's not quibble about the meanings of 'random' and 'chance.' That's a red herring in this context.] Clearly, evolution can work by chance, so why does Dawkins say it can't?

It's not because Dawkins is unaware of random genetic drift and Neutral Theory. The explanation (I think) is that Dawkins restricts his definition of evolution to evolution by natural selection. From his perspective, the fixation of alleles by random genetic drift doesn't count as real evolution because it doesn't produce adaptations. That's the view that he described in The Extended Phenotype back in 1982 and the view that he has implicitly supported over the past few decades [Richard Dawkins' View of Random Genetic Drift].

This is one of the reasons why we refer to Dawkins as an adaptationist and it's one of the reasons why so many of today's evolutionary biologists, especially those who study evolution at the molecular level, reject Dawkins' view of evolution in favor of a more pluralistic approach.
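Here's a quick way to see the difference between drift and selection for yourself. This is only a minimal sketch, not anything taken from Coyne's book or Dawkins' review. It assumes a toy haploid Wright-Fisher population with two alleles, and the function names and parameters (`wright_fisher`, `fixation_fraction`, the selection coefficient `s`) are my own hypothetical choices for illustration.

```python
import random


def wright_fisher(pop_size, start_freq, generations, s=0.0, rng=None):
    """Track the frequency of allele A in a toy haploid Wright-Fisher model.

    s is an assumed selection coefficient for A (must be > -1).
    s = 0 means no selection at all, i.e. pure random genetic drift.
    """
    rng = rng or random.Random()
    freq = start_freq
    history = [freq]
    for _ in range(generations):
        # Selection shifts the expected frequency; with s = 0 it is unchanged.
        expected = freq * (1 + s) / (freq * (1 + s) + (1 - freq))
        # Drift: each offspring independently inherits A with that probability.
        count = sum(rng.random() < expected for _ in range(pop_size))
        freq = count / pop_size
        history.append(freq)
    return history


def fixation_fraction(pop_size, start_freq, generations, s, trials, seed=1):
    """Fraction of independent runs in which allele A ends up fixed."""
    rng = random.Random(seed)
    fixed = 0
    for _ in range(trials):
        if wright_fisher(pop_size, start_freq, generations, s, rng)[-1] == 1.0:
            fixed += 1
    return fixed / trials


if __name__ == "__main__":
    # Neutral allele (s = 0): allele frequencies change by chance alone.
    print("drift only:", fixation_fraction(20, 0.5, 200, s=0.0, trials=200))
    # Favoured allele (s > 0): selection biases the outcome toward fixation.
    print("with selection:", fixation_fraction(20, 0.5, 200, s=0.2, trials=200))
```

With `s = 0` there is no selection anywhere in the model, yet allele frequencies still change from generation to generation. Under the allele-frequency definition that Coyne gives, that change is evolution, and it happens entirely by chance.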

Note: I wrote an earlier version of this post in 2009 [Dawkins on Chance] and I wrote a long essay on Evolution by Accident where I describe many other examples of evolution by chance.

Saturday, September 10, 2022

Wikipedia articles: Quality and importance rankings

Wikipedia has a way of assessing the quality of articles that have been posted and edited. The rankings are somewhat confusing and it’s hard to find the complete list of quality categories so I’m putting a link to Wikipedia: Content assessment here.

There are six categories ranging from FA (featured article) to C.

Monday, September 05, 2022

The 10th anniversary of the ENCODE publicity campaign fiasco

On Sept. 5, 2012 ENCODE researchers, in collaboration with the science journal Nature, launched a massive publicity campaign to convince the world that junk DNA was dead. We are still dealing with the fallout from that disaster.

The Encyclopedia of DNA Elements (ENCODE) was originally set up to discover all of the functional elements in the human genome. They carried out a massive number of experiments involving a huge group of researchers from many different countries. The results of this work were published in a series of papers in the September 6th, 2012 issue of Nature. (The papers appeared on Sept. 5th.)

Sunday, September 04, 2022

Wikipedia: the ENCODE article

The ENCODE article on Wikipedia is a pretty good example of how to write a science article. Unfortunately, there are a few issues that will be very difficult to fix.

When Wikipedia was formed twenty years ago, there were many people who were skeptical about the concept of a free crowdsourced encyclopedia. Most people understood that a reliable source of information was needed for the internet because the traditional encyclopedias were too expensive, but could it be done by relying on volunteers to write articles that could be trusted?

The answer is mostly “yes” although that comes with some qualifications. Many science articles are not good; they contain inaccurate and misleading information and often don’t represent the scientific consensus. They also tend to be disjointed and unreadable. On the other hand, many non-science articles are at least as good as, and often better than, anything in the traditional encyclopedias (e.g. Battle of Waterloo; Toronto, Ontario; The Beach Boys).

By 2008, Wikipedia had expanded enormously and the quality of articles was being compared favorably to those of Encyclopedia Britannica, which had been forced to go online to compete. However, this comparison is a bit unfair since it downplays science articles.

Monday, August 29, 2022

The creationist view of junk DNA

Here's a recent video podcast (Aug. 23, 2022) from the Institute for Creation Research (sic). It features an interview with Dr. Jeff Tomkins of the ICR where he explains the history of junk DNA and why scientists no longer believe in junk DNA.

Most Sandwalk readers will recognize all the lies and distortions but here's the problem: I suspect that the majority of biologists would pretty much agree with the creationist interpretation. They also believe that junk DNA has been refuted and most of our genome is functional.

That's very sad.

Friday, August 26, 2022

ENCODE and their current definition of "function"

ENCODE has mostly abandoned its definition of function based on biochemical activity and replaced it with "candidate" function or "likely" function, but the message isn't getting out.

Back in 2012, the ENCODE Consortium announced that 80% of the human genome was functional and junk DNA was dead [What did the ENCODE Consortium say in 2012?]. This claim was widely disputed, causing the ENCODE Consortium leaders to back down in 2014 and restate their goal (Kellis et al. 2014). The new goal is merely to map all the potential functional elements.

... the Encyclopedia of DNA Elements Project [ENCODE] was launched to contribute maps of RNA transcripts, transcriptional regulator binding sites, and chromatin states in many cell types.

The new goal was repeated when the ENCODE III results were published in 2020, although you had to read carefully to recognize that they were no longer claiming to identify functional elements in the genome and they were raising no objections to junk DNA [ENCODE 3: A lesson in obfuscation and opaqueness].