There is no such thing as “junk DNA.” Indeed, a suite of discoveries made over the past few decades have put to rest this misnomer and have identified many important roles that so-called junk DNA provides to both genome structure and function (this special issue; Biémont and Vieira 2006; Jeck et al. 2013; Elbarbary et al. 2016; Akera et al. 2017; Chen and Yang 2017; Chuong et al. 2017). Nevertheless, given the historical focus on coding regions of the genome, our understanding of the biological function of non-coding regions (e.g., repetitive DNA, transposable elements) remains somewhat limited, and therefore, all those enigmatic and poorly studied regions of the genome that were once identified as junk are instead best viewed as genomic “dark matter.”


Thursday, April 05, 2018

Peter Larsen: "There is no such thing as 'junk DNA'"

Thursday, February 29, 2024

Nils Walter disputes junk DNA: (3) Defining 'gene' and 'function'

I'm discussing a recent paper published by Nils Walter (Walter, 2024). He is trying to explain the conflict between proponents of junk DNA and their opponents. His main focus is building a case for large numbers of non-coding genes.

This is the third post in the series. The first one outlines the issues that led to the current paper and the second one describes Walter's view of a paradigm shift.

-Nils Walter disputes junk DNA: (1) The surprise

-Nils Walter disputes junk DNA: (2) The paradigm shaft

Any serious debate requires some definitions and the debate over junk DNA is no exception. It's important that everyone is on the same page when using specific words and phrases. Nils Walter recognizes this so he begins his paper with a section called "Starting with the basics: Defining 'function' and 'gene'."

Thursday, August 11, 2011

Junk & Jonathan: Part 9—Chapter 6

This is part 9 of my review of The Myth of Junk DNA. For a list of other postings on this topic see the links below. For other postings on junk DNA check out the links in Genomes & Junk DNA in the "theme box" below or in the sidebar under "Themes."

- Junk & Jonathan: Part 1—Getting the History Correct

- Junk & Jonathan: Part 2— What Did Biologists Really Say About Junk DNA?

- Junk & Jonathan: Part 3—The Preface: Preface

- Junk & Jonathan: Part 4—Chapter 1: The Controversy over Darwinian Evolution

- Junk & Jonathan: Part 5—Chapter 2: Junk DNA: The Last Icon of Evolution?

- Junk & Jonathan: Part 6—Chapter 3: Most DNA Is Transcribed into RNA

- Junk & Jonathan: Part 7—Chapter 4: Introns and the Splicing Code

- Junk & Jonathan: Part 8—Chapter 5: Pseudogenes—Not so Pseudo After All

The title of Chapter 6 is "Jumping Genes and Repetitive DNA." Wells describes transposons as jumping genes and includes them in the category of "Repetitive Non-Protein-Coding DNA." This category makes up 50% of the genome, according to Wells. The breakdown is as follows: LINEs 21%; SINEs 13%; retroviral-like elements 8%; simple sequence repeats 5%; and DNA-only transposons 3%. These percentages are similar to those published in a wide variety of textbooks and scientific papers. [What's in Your Genome?] [Junk in your Genome: LINEs] [Junk in Your Genome: SINES]
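As a quick arithmetic check, the five repeat classes in Wells' breakdown do add up to his 50% figure. Here's a minimal Python sketch; the dictionary simply restates the percentages quoted above and nothing else is assumed.

```python
# Percentages of the human genome assigned to each class of repetitive
# non-protein-coding DNA, as quoted from Wells (Chapter 6).
breakdown = {
    "LINEs": 21,
    "SINEs": 13,
    "retroviral-like elements": 8,
    "simple sequence repeats": 5,
    "DNA-only transposons": 3,
}

# Sum the classes and print a small table.
total = sum(breakdown.values())
for name, pct in breakdown.items():
    print(f"{name:<26}{pct:>3}%")
print(f"{'total':<26}{total:>3}%")
```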

Many LINES and SINES are Functional

Most of the transposons in the human genome are fragments or otherwise mutated versions of the original mobile elements. This is a good reason for assigning them to the junk DNA category. They are a form of pseudogene. What this means is that half of our genome is composed of defective transposons that can't "jump." This is non-functional DNA, otherwise known as "junk."

This conclusion is unacceptable to Jonathan Wells. In order to prove that junk DNA is a myth he must show that most of this DNA has a function. He begins with a section called "Many LINES and SINEs are functional."

The first evidence for functionality comes from the data showing that most of the genome is transcribed—the main theme of Chapter 3. If most of these defective transposons are transcribed then it seems likely that they have a function. But the evidence for widespread transcription is not widely accepted and one of the main criticisms is that it's extremely difficult to tell which repeated sequences are actually transcribed and which ones just happen to be very similar to a family member that is transcribed. We know that active LINES and SINES have to be transcribed at some time and we know that many defective transposons will still be transcribed even if they are non-functional. The question is not whether any transposons are transcribed, it's whether all (or most) of them are. The answer is almost certainly that very few are transcribed on a regular basis. The pervasive transcription reported in some papers is most likely accidental transcription at a very low level or artifact.1

Nishihara, H., Smit, A.F., and Okada, N. (2006) Functional noncoding sequences derived from SINEs in the mammalian genome. Genome Res. 16:864-874.

The first "function" paper Wells mentions is Nishihara et al. (2006). These workers characterized a new class of SINES in the human genome. They consist of a hybrid of 5S RNA and a tRNA (~300 bp). These SINES are found in most vertebrates (fish, amphibians, birds, mammals). There are about 1000 of these SINEs in the human genome and in 105 of them there seems to be more sequence conservation in the central portion of the sequence than expected from junk DNA. For example, the overall sequence similarity between human and zebrafish is about 59% in a stretch of 338 nucleotides. (The authors refer to these as regions that are "under strong selective constraint." That's a bit exaggerated.)

This section contains three other examples of sequence similarities in a small class of transposons. These are the only examples where he makes the case for widespread functionality of defective transposons.

Wells is happy to accept the arguments that sequence similarity is evidence of homology and also of function.2 Several possible functions were ruled out in these studies but it's possible that they have some undiscovered function that results in moderate sequence conservation. If this turns out to be true it would mean that 0.01% of the genome should be moved from the "junk" category to the "functional" category.

Some Specific Functions of LINES and SINES

Wells then proceeds to a discussion of papers that demonstrate a function for individual transposon fragments. Before we look at those studies, it's worth keeping the big picture in mind. A typical eukaryotic genome has a million defective transposons and a dozen or so functional transposons that are still capable of jumping to new locations. If you examine the genomes of a dozen or so eukaryotes you can expect to find some examples where these bits of DNA have been co-opted to perform some new function. If you collect together all these examples from many different species then it looks like an impressive array of functional transposon sequences but impressions can be deceptive. It's still true that 99.9% of defective transposons are junk.

This section of the book consists of four pages of specific examples of presumed functional transposon-like sequences from mouse, rat, human, hamster, silkworm, fruit fly, and Arabidopsis (plant). There are 54 references to the scientific literature. Wells makes no attempt to assess the reliability of these studies, nor does he indicate whether the claims have been independently confirmed by other labs.

It's a typical ploy of creationists to focus on specific examples of unusual adaptations and ignore the context. In this case, all those specific individual examples don't amount to a hill of beans when compared to the vast majority of junk in eukaryotic genomes.

However, in fairness it's not just Jonathan Wells and the creationists who fail to see the forest for the trees. There are many scientists who also think that the evidence quoted by Wells is conclusive proof that junk DNA is a myth. Wells has no trouble finding such scientists whose views are expressed in the scientific literature.

Finding that Alu and B2 SINEs are transcribed, both as distinct RNA polymerase III transcripts and as part of RNA polymerase II transcripts, and that these SINE encoded RNAs indeed have biological functions has refuted the historical notion that SINEs are merely "junk DNA."

Walters, R.D., Kugel, J.A., and Goodrich, J.A. (2009) Critical Review (sic): InvAluable Junk: The Cellular Impact and Function of Alu and B2 RNAs. IUBMB Life 61:831-837.

Endogenous Retroviruses

About 8% of your genome consists of endogenous retroviruses. Most of them are defective, usually because large parts of them have been deleted. Some are still capable of producing viable retroviruses similar to infectious retroviruses such as HIV. There are about 30,000 loci in your genome where endogenous retroviruses are located.

Endogenous retroviruses contain strong transcriptional promoters since they have to produce a lot of transcripts when they are induced. Some of these small promoter regions (called "LTRs") have become incorporated into the promoter regions of regular genes. What happened is that millions of years ago a retrovirus accidentally integrated into the genome near the 5′ end of a gene and when parts of the endogenous retrovirus were lost by deletion the remaining retroviral promoter region became active leading to enhanced transcription of the adjacent gene. Over time this piece of the retrovirus evolved to become an integral part of the regulation of the gene.

Wells describes several examples of such events in different mammals. He also describes a famous example where expression of a modified envelope protein from a defective endogenous retrovirus has taken on a role in the development of the mammalian placenta. Together, these examples of co-opted retroviral sequences account for about a dozen of the 30,000 copies in your genome. Naturally, this is assumed to be proof that none of the defective endogenous retroviruses are junk. That's the logic of creationists.
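To put "about a dozen of the 30,000 copies" in perspective, here is the same arithmetic as a short Python sketch. The value 12 is just a stand-in for the rough "dozen" in the text, not a precise tally of co-opted retroviral sequences.

```python
# Rough figures from the text: ~30,000 endogenous retrovirus loci in the
# human genome, of which "about a dozen" are known to have been co-opted.
total_erv_loci = 30_000
coopted_loci = 12  # illustrative stand-in for "about a dozen"

# Percentage of ERV loci with a known co-opted function, and the rest.
percent_coopted = 100 * coopted_loci / total_erv_loci
percent_remaining = 100 - percent_coopted

print(f"co-opted: {percent_coopted:.2f}% of ERV loci")
print(f"no known function: {percent_remaining:.2f}%")
```

Changing `coopted_loci` shows how many co-opted copies would have to be found before the fraction of functional endogenous retroviruses became anything more than a rounding error.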

Francis Collins and Repetitive Elements

The last section of this chapter is pure rhetoric. Wells is upset because Francis Collins, a Christian, wrote the following in The Language of God (pages 135-136):

Even more compelling evidence for a common ancestor comes from the study of what are known as ancient repetitive elements (AREs). These arise from "jumping genes," which are capable of copying and inserting themselves in various other locations in the genome, usually without any functional consequences. Mammalian genomes are littered with such AREs, with roughly 45 percent of the human genome made up of such flotsam and jetsam. When one aligns sections of the human and mouse genomes, anchored by the appearance of gene counterparts that occur in the same order, one can usually also identify AREs in approximately the same location in these two genomes.

Some of these may have been lost in one species or the other, but many of them remain in a position that is most consistent with their having arrived in the genome of a common mammalian ancestor, and having been carried along ever since. Of course, some might argue that these are actually functional elements placed there by the Creator for a good reason, and our discounting them as "junk DNA" just betrays our current level of ignorance. And indeed, some small fraction of them may play important regulatory roles. But certain examples severely strain the credulity of that explanation. The process of transposition often damages the jumping gene. There are AREs throughout the human and mouse genomes that were truncated when they landed, removing any possibility of their functioning. In many cases, one can identify a decapitated and utterly defunct ARE in parallel positions in the human and mouse genome.

Wells is upset because this is a double-barreled attack on intelligent design. Not only does Collins describe these sequences as junk but he also uses them as evidence of common ancestry. This is one of the reasons why creationists like Wells maintain that junk DNA is used to support evolution.

Wells says,

Collins's argument rests on the assumption that those repetitive elements ... are nonfunctional. Yet their similar position in the human and mouse genomes could mean that they are performing some function in both. Given the rate at which functions are being discovered, Collins's assumption seems foolhardy, and his argument could easily collapse in the face of new scientific discoveries.

As long as most of these repetitive elements remain non-functional they pose a serious problem for Intelligent Design Creationists. The IDiots have been wishing for evidence of function for 20 years but "wishing" isn't a very scientific argument. Given the rate at which functions are being discovered, it's going to be a long, long time before all those hundreds of thousands of repetitive elements are assigned a function (i.e. never!). The vast majority of them are junk and discovery of a few co-opted sequences isn't going to change that fact.

1. As a general rule, Wells "forgets" to mention when one of his "facts" is disputed in the scientific literature. Like most creationists, he is delighted to quote any scientific studies that confirm his bias but he is somewhat more reluctant to quote papers that don't. In this case—pervasive transcription—the criticisms in the scientific literature are so well-known that he is forced to discuss them in Chapter 9.

2. This is a bit strange coming from someone who doesn't believe in evolution but logic has never been a strong point in Wells' writing. Note that Wells does not accept the corollary argument that lack of conservation implies lack of function.

Friday, May 07, 2021

More misinformation about junk DNA: this time it's in American Scientist

Emily Mortola and Manyuan Long have just published an article in American Scientist about Turning Junk into Us: How Genes Are Born. The article contains a lot of misinformation about junk DNA that I'll discuss below.

Emily Mortola is a freelance science writer who worked with Manyuan Long when she was an undergraduate (I think). Manyuan Long is the Edna K. Papazian Distinguished Service Professor of Ecology and Evolution in the Department of Ecology and Evolution at the University of Chicago. His main research interest is the origin of new genes. It's reasonable to suspect that he's an expert on genome structure and evolution.

The article is behind a paywall, so most of you can't see anything more than the opening paragraphs. Let's look at those first. The second sentence is ...

As we discovered in 2003 with the conclusion of the Human Genome Project, a monumental 13-year-long research effort to sequence the entire human genome, approximately 98.8 percent of our DNA was categorized as junk.

This is not correct. The paper on the finished version of the human genome sequence was published in October 2004 (Finishing the euchromatic sequence of the human genome) and the authors reported that the coding exons of protein-coding genes covered about 1.2% of the genome. However, the authors also noted that there are many genes for tRNAs, ribosomal RNAs, snoRNAs, microRNAs, and probably other functional RNAs. Although they don't mention it, the authors must also have been aware of regulatory sequences, centromeres, telomeres, origins of replication and possibly other functional elements. They never said that all noncoding DNA (98.8%) was junk because that would be ridiculous. It's even more ridiculous to say it in 2021 [Stop Using the Term "Noncoding DNA:" It Doesn't Mean What You Think It Means].

The part of the article that you can see also lists a few "Quick Takes" and one of them is ...

Close to 99 percent of our genome has been historically classified as noncoding, useless “junk” DNA. Consequently, these sequences were rarely studied.

This is also incorrect as many scientists have pointed out repeatedly over the past fifty years or so. At no time in the past 50 years has any knowledgeable scientist ever claimed that all noncoding DNA is junk. I'm sorely tempted to accuse the authors of this article of lying because they really should know better, especially if they're writing an article about junk DNA in 2021. However, I reluctantly defer to Hanlon's razor.

Mortola and Long claim that mammalian genomes contain between 85% and 99% junk DNA and wonder if it could have a function.

To most geneticists, the answer was that it has no function at all. The flow of genetic information—the central dogma of molecular biology—seems to leave no role for all of our intergenic sequences. In the classical view, a gene consists of a sequence of nucleotides of four possible types--adenine, cytosine, guanine, and thymine--represented by the letters A, C, G, and T. Three nucleotides in a row make up a codon, with each codon corresponding to a specific amino acid, or protein subunit, in the final protein product. In active genes, harmful mutations are weeded out by selection and beneficial ones are allowed to persist. But noncoding regions are not expressed in the form of a protein, so mutations in noncoding regions can be neither harmful nor beneficial. In other words, "junk" mutations cannot be steered by natural selection.

Those of you who have read this far will cringe when reading that. There are so many obvious errors in that paragraph that applying Hanlon's razor seems very complimentary. Imagine saying in the 21st century that the Central Dogma leaves no role at all for regulatory sequences or ribosomal RNA genes! But there's more; the authors double-down on their incorrect understanding of "gene" in order to fit their misunderstanding of the Central Dogma.

What Is a Gene, Really?

In our de novo gene studies in rice, to truly assess the potential significance of de novo genes, we relied on a strict definition of the word "gene" with which nearly every expert can agree. First, in order for a nucleotide sequence to be considered a true gene, an open reading frame (ORF) must be present. The ORF can be thought of as the "gene itself"; it begins with a starting mark common for every gene and ends with one of three possible finish line signals. One of the key enzymes in this process, the RNA polymerase, zips along the strand of DNA like a train on a monorail, transcribing it into its messenger RNA form. This point brings us to our second important criterion: A true gene is one that is both transcribed and translated. That is, a true gene is first used as a template to make transient messenger RNA, which is then translated into a protein.

The authors admit in the next paragraph that some pseudogenes may produce functional RNAs that are never translated into proteins but they don't mention any other types of gene. I can understand why you might concentrate on protein-coding genes if you are studying de novo genes but why not just say that there are two types of genes and either one can arise de novo? But there's another problem with their definition: they left out a key property of a gene. It's not sufficient that a given stretch of DNA is transcribed and the RNA is translated to make a protein: the protein has to have a function before you can say that the stretch of DNA is a gene [What Is a Gene?]. We'll see in a minute why this is important.

The main point of the paper is the birth of de novo genes and the authors discuss their work with the rice genome. They say they've discovered 175 de novo genes but they don't say how many have a real biological function. This is an important problem in this field and it would have been fascinating to see a description of how they go about assigning a function to their, mostly small, peptides [The evolution of de novo genes]. I'm guessing that they just assume a function as soon as they recognize an open reading frame in a transcript.

As you can see from the title of the article, the emphasis is on the idea that de novo genes can arise from junk DNA—a concept that's not seriously disputed. The one good thing about the article is that the authors do not directly state that the reason for junk DNA is to give rise to new genes but this caption is troubling.

The Human Genome Project was a 13-year-long research effort aimed at mapping the entire human genetic sequence. One of its most intriguing findings was the observation that the number of protein-coding genes estimated to exist in humans--approximately 22,300--represents a mere 1.2 percent of our whole genome, with the other 98.8 percent being categorized as noncoding, useless junk. Analyses of this presumed junk DNA in diverse species are now revealing its role in the creation of genes.

Why do science writers continue to spread misinformation about junk DNA when there's so much correct information out there? All you have to do is look [More misconceptions about junk DNA - what are we doing wrong?].

Saturday, April 12, 2025

Templeton Foundation funds a grant on transposons

Templeton recently awarded a grant of $607,686 (US) to study the role of transposons in the human genome. The project leader is Stefan Linquist, a philosopher from the University of Guelph (Guelph, Ontario, Canada). Stefan has published a number of papers on junk DNA and he promotes the definition of functional DNA as DNA that is subject to purifying selection [The function wars are over]. Other members of the team include Ryan Gregory and Ford Doolittle who are prominent supporters of junk DNA.

Saturday, July 29, 2023

How could a graduate student at King's College in London not know the difference between junk DNA and non-coding DNA?

There's something called "the EDIT lab blog" written by people at King's College in London (UK). Here's a recent post (May 19, 2023) that was apparently written by a Ph.D. student: J for Junk DNA Does Not Exist!.

It begins with the standard false history,

The discovery of the structure of DNA by James Watson and Francis Crick in 1953 was a milestone in the field of biology, marking a turning point in the history of genetics (Watson & Crick, 1953). Subsequent advances in molecular biology revealed that out of the 3 billion base pairs of human DNA, only around 2% codes for proteins; many scientists argued that the other 98% seemed like pointless bloat of genetic material and genomic dead-ends referred to as non-coding DNA, or junk DNA – a term you’ve probably come across (Ohno, 1972).

You all know what's coming next. The discovery of function in non-coding DNA overthrew the concept of junk DNA and ENCODE played a big role in this revolution. The post ends with,

Nowadays, researchers are less likely to describe any non-coding sequences as junk because there are multiple other and more accurate ways of labelling them. The discussion over non-coding DNA’s function is not over, and it will be long before we understand our whole genome. For many researchers, the field’s best way ahead is keeping an open mind when evaluating the functional consequences of non-coding DNA and RNA, and not to make assumptions about their biological importance.

As Sandwalk readers know, there was never a time when knowledgeable scientists said that all non-coding DNA was junk. They always knew that there was functional DNA outside of coding regions. Real open-minded scientists are able to distinguish between junk DNA and non-coding DNA and they are able to evaluate the evidence for junk DNA without dismissing it based on a misunderstanding of the history of the subject.

The question is why would a Ph.D. student who makes the effort to write a blog post on junk DNA not take the time to read up on the subject and learn the proper definition of junk and the actual evidence? Why would their supervisors and other members of the lab not know that this post is wrong?

Thursday, April 07, 2011

IDiots vs Francis Collins

There is a lot of positive evidence that much of the DNA in our genome is non-functional. Wells is dead wrong about this. Furthermore, assuming that this junk DNA is non-functional and assuming that species share a common ancestor, we can explain many observations about genomes. IDiots can't do this. They have yet to provide an explanation for shared pseudogenes1 in the chimp and human genomes, for example. And I haven't heard any IDiot explain why the primate genomes are chock full of Alu sequences derived from a particular rearranged 7SL RNA sequence while rodent genomes have SINES from a different rearranged 7SL sequence and lots of others from a tRNA pseudogene [Junk in Your Genome: SINES].

Did Francis Collins use the existence of junk DNA as support for Darwin's theory of evolution? Here's what Wells says in the video (50 second mark).

In fact he relies on so-called junk DNA—sequences of DNA that apparently have no function—as evidence that Darwin's theory explains everything we see in living things.

I searched in The Language of God for proof that Wells is correct. The best example I could find is from pages 129-130 where Collins describes the results from comparing DNA sequences of different organisms. He points out that you can compare coding regions and detect similarities between humans and other mammals and even yeast and bacteria. On the other hand, if you look at non-coding regions the similarities fall off rapidly so that there's almost no similarity between human DNA and non-mammalian genomes (e.g. chicken). This is powerful support for "Darwin's theory of evolution" according to Francis Collins. First, because you can construct phylogenetic trees based on DNA sequences and ...

Second, within the genome, Darwin's theory predicts that mutations that do not affect function (namely, those located in "junk DNA") will accumulate steadily over time. Mutations in the coding regions of genes, however, are expected to be observed less frequently, since most of these will be deleterious, and only a rare such event will provide a selective advantage and be retained during the evolutionary process. That is exactly what is observed.

I leave it as an exercise for Sandwalk readers to figure out how to explain this observation if the regions that accumulate fixed mutations aren't really junk but functional DNA. Your explanation should consist of two parts: (1) why the DNA is functional even though the sequence isn't conserved (provide evidence)2, and (2) why coding regions show fewer changes and why comparisons of different species lead to a tree-like organization.

I wasn't able to find where in Origin of Species Darwin discusses this prediction but I'm sure it must be there somewhere. Perhaps some kind reader can supply the page numbers?

1. Every knowledgeable, intelligent biologist knows that pseudogenes exist and they are junk. That's not in dispute. I haven't heard any Intelligent Design Creationists admit that there are thousands of functionless pseudogenes in our genome.

2. I can think of two or three possibilities but no evidence to support them.

Tuesday, November 05, 2013

Stop Using the Term "Noncoding DNA:" It Doesn't Mean What You Think It Means

He is also the senior author on a paper I blogged about last week—the one where some journalists made a big deal about junk DNA when there was nothing in the paper about junk DNA [How to Turn a Simple Paper into a Scientific Breakthrough: Mention Junk DNA].

Dan Graur contacted him by email to see if he had any comment about this misrepresentation of his published work and he defended the journalist. Here's the email response from Axel Visel to Dan Graur.

Saturday, March 21, 2015

Junk DNA comments in the New York Times Magazine

Thursday, November 29, 2007

More Misconceptions About Junk DNA

iayork of Mystery Rays from Outer Space is upset about an article on retroviruses that appeared in The New Yorker [ “Darwin’s Surprise” in the New Yorker]. Here's what iayork says,

The whole “junk DNA” has been thrashed out a dozen times (see Genomicron for a good start). The bottom line? If you search Pubmed for the phrase “junk DNA” you will find a total of 80 articles (compare to, say, 985 articles for “endogenous retrovirus”); and a large fraction of those 80 articles only use the phrase to explain what a poor term it is. Scientists don’t use the term “junk DNA’. Lazy journalists use it so they can sneer at scientists (who don’t use it) for using it.

Wrong! Lots of scientists use the term "junk DNA." Properly understood, it's a very useful term and has been for several decades [Noncoding DNA and Junk DNA].

Yes, it's true that journalists often don't understand junk DNA and they are easily tricked into thinking that junk DNA is a discredited concept. The journalists are wrong, not the scientists who use the term.

It's also true that there are many scientists who feel uneasy about junk DNA because it doesn't fit with their adaptationist leanings. Just because there's controversy doesn't mean that the term isn't still used by its proponents (I am one).

I'm sorry, iayork, but statements like that don't help in educating people about science. Junk DNA is that fraction of a genome that has no known function and based on everything we know about biology is unlikely to have some unknown function. Junk DNA happens to represent more than 90% of our genome and that's significant by my standards.

Monday, July 11, 2016

A genetics professor who rejects junk DNA

He explains why he is a Christian and why he is "more than his genes" in Am I more than my genes? Faith, identity, and DNA.

Here's the opening paragraph ...

The word “genome” suggests to many that our DNA is simply a collection of genes from end-to-end, like books on a bookshelf. But it turns out that large regions of our DNA do not encode genes. Some once called these regions “junk DNA.” But this was a mistake. More recently, they have been referred to as the “dark matter” of our genome. But what was once dark is slowly coming to light, and what was once junk is being revealed as treasure. The genome is filled with what we call “control elements” that act like switches or rheostats, dialing the activation of nearby genes up and down based on whatever is needed in a particular cell. An increasing number of devastating complex diseases, such as cancer, diabetes, and heart disease, can often be traced back, in part, to these rheostats not working properly.

Tuesday, August 06, 2024

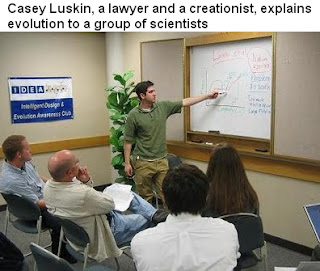

Is Casey Luskin lying about junk DNA or is he just stupid?

I'm going to address a recent article by Casey Luskin on Evolution News (sic) and a Current Topics in Science podcast produced by Christ Jesus Ministries. But first, some background.

A recent paper in Nature looked at a region on chromosome 21 where mutations associated with autoimmune and inflammatory disease were clustered. This region did not contain any known genes and is referred to in the paper as a "gene desert." The authors reasoned that it probably contained one or more regulatory sites and, as expected, they were able to identify an enhancer element that helps control expression of a nearby gene called ETS2 (Stankey et al., 2024).

The results were promoted in a BBC article: The 'gene deserts' unravelling the mysteries of disease. The subtitle of the article tells you where this is going, "Mutations in these regions of so-called "junk" DNA are increasingly being linked to a range of diseases, from Crohn's to cancer." The article implies that since only 2% of the human genome codes for proteins the remaining 98% "has no obvious meaning or purpose." The caption to one of the figures says, "Gene deserts are regions of so-called genetic "junk" that do not code for proteins – but they may play an important role in disease." Thus, according to the BBC, the discovery of a regulatory sequence conflicts with the idea of junk DNA.

There's no mention of junk DNA in the original Nature article and none of the comments by the senior author (James Lee) in the BBC article suggest that he is confused about junk DNA.

An article published in Nature Communications looked at expression of human endogenous retrovirus elements (HERVs) in the human brain. The authors found that expression of two HERV sequences is associated with risk for schizophrenia, but they noted that it wasn't clear how this expression played a role in psychiatric disorders (Duarte et al., 2024).

Although the term "junk DNA" was not mentioned in the original article, the press release from King's College London makes the point that HERVs were assumed to be junk DNA. The implication is that this is one of the first publications to discover a possible function for this junk DNA. (Functional elements derived from HERVs have been known for three decades.)

Casey Luskin wrote about these studies yesterday in an article on the intelligent design website: Disease-Associated “Junk” DNA Is Evidence of Function and talks about it in the podcast that I link to below.

Luskin continues to promote the false claim that all non-coding DNA was assumed to be junk. That allows him to highlight every study that discovers new functional elements in non-coding DNA and claim that it refutes junk DNA. He's been doing this for years in spite of multiple attempts to correct him. Therefore, the answer to the question in the title is obvious: he is a liar. Judge for yourselves whether he is also stupid.

Duarte et al. (2024) Integrating human endogenous retroviruses into transcriptome-wide association studies highlights novel risk factors for major psychiatric conditions. Nature Communications 15: 3803 [doi: 10.1038/s41467-024-48153-z]

Stankey et al. (2024) A disease-associated gene desert directs macrophage inflammation through ETS2. Nature 630: 447–456 [doi: 10.1038/s41586-024-07501-1]

Thursday, September 20, 2012

Are All IDiots Irony Deficient?

You might think that distinguishing between these two types of expert scientists would be a real challenge and you would be right. Let's watch how David Klinghoffer manoeuvres through this logical minefield at: ENCODE Results Separate Science Advocates from Propagandists. He begins with ....

"I must say," observes an email correspondent of ours, who is also a biologist, "I'm getting a kick out of watching evolutionary biologists attack molecular biologists for 'hyping' the ENCODE results."

True, and equally enjoyable -- in the sense of confirming something you strongly suspected already -- is seeing the way the ENCODE news has drawn a bright line between voices in the science world that care about science and those that are more focussed on the politics of science, even as they profess otherwise.

Wednesday, March 13, 2024

Nils Walter disputes junk DNA: (7) Conservation of transcribed DNA

I'm discussing a recent paper published by Nils Walter (Walter, 2024). He is arguing against junk DNA by claiming that the human genome contains large numbers of non-coding genes.

This is the seventh post in the series. The first one outlines the issues that led to the current paper and the second one describes Walter's view of a paradigm shift/shaft. The third post describes the differing views on how to define key terms such as 'gene' and 'function.' In the fourth post I discuss his claim that differing opinions on junk DNA are mainly due to philosophical disagreements. The fifth and sixth posts address specific arguments in the junk DNA debate.

- Nils Walter disputes junk DNA: (1) The surprise

- Nils Walter disputes junk DNA: (2) The paradigm shaft

- Nils Walter disputes junk DNA: (3) Defining 'gene' and 'function'

- Nils Walter disputes junk DNA: (4) Different views of non-functional transcripts

- Nils Walter disputes junk DNA: (5) What does the number of transcripts per cell tell us about function?

- Nils Walter disputes junk DNA: (6) The C-value paradox

Sequence conservation

If you don't know what a transcript is doing then how are you going to know whether it's a spurious transcript or one with an unknown function? One of the best ways is to check and see whether the DNA sequence is conserved. There's a powerful correlation between sequence conservation and function: as a general rule, functional sequences are conserved and non-conserved sequences can be deleted without consequence.

There might be an exception to the conservation criterion in the case of de novo genes. They arise relatively recently so there's no history of conservation. That's why purifying selection is a better criterion. Now that we have the sequences of thousands of human genomes, we can check to see whether a given stretch of DNA is constrained by selection or whether it accumulates mutations at the rate we expect if its sequence were irrelevant junk DNA (neutral rate). The results show that less than 10% of our genome is being preserved by purifying selection. This is consistent with all the other arguments that 90% of our genome is junk and inconsistent with arguments that most of our genome is functional.
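To make the neutral-rate comparison concrete, here is a toy sketch in Python. It is not the method used in any particular study. It counts variants in a window, compares that count with a hypothetical neutral expectation (the `neutral_rate` value and the window counts are made-up numbers for illustration), and asks how likely so few variants would be by chance if the sequence were junk, assuming variant counts follow a Poisson distribution.

```python
import math


def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam), summed term by term."""
    term = math.exp(-lam)  # P(X = 0)
    total = term
    for i in range(1, k + 1):
        term *= lam / i  # P(X = i) computed from P(X = i - 1)
        total += term
    return total


def constraint_summary(observed: int, length_bp: int, neutral_rate: float):
    """Compare observed variants in a window with the neutral expectation.

    neutral_rate is a hypothetical expected number of variants per bp if the
    sequence were irrelevant (neutral). Returns the observed/expected ratio and
    the probability of seeing this few variants by chance under neutrality.
    """
    expected = neutral_rate * length_bp
    ratio = observed / expected
    p_depleted = poisson_cdf(observed, expected)
    return ratio, p_depleted


# Hypothetical windows: 1 kb each, neutral expectation of 0.05 variants per bp.
ratio_a, p_a = constraint_summary(observed=48, length_bp=1000, neutral_rate=0.05)
ratio_b, p_b = constraint_summary(observed=12, length_bp=1000, neutral_rate=0.05)
print(f"window A: o/e = {ratio_a:.2f}, P = {p_a:.3f}")  # close to neutral
print(f"window B: o/e = {ratio_b:.2f}, P = {p_b:.2e}")  # strongly depleted
```

A ratio near 1 is what we expect from junk DNA; a ratio well below 1 with a tiny probability is the signature of purifying selection. Real analyses also have to model how the mutation rate varies across the genome, which this sketch ignores.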

This sounds like a problem for the anti-junk crowd. Let's see how it's addressed in Nils Walter's article in BioEssays.

There are several hand-waving objections to using conservation as an indication of function and Walter uses them all plus one unique argument that we'll get to shortly. Let's deal with some of the "facts" that he discusses in his defense of function. He seems to agree that much of the genome is not conserved even though it's transcribed. In spite of this, he says,

"... the estimates of the fraction of the human genome that carries function is still being upward corrected, with the best estimate of confirmed ncRNAs now having surpassed protein-coding genes,[12] although so far only 10%–40% of these ncRNAs have been shown to have a function in, for example, cell morphology and proliferation, under at least one set of defined conditions."

This is typical of the rhetoric in his discussion of sequence conservation. He seems to be saying that there are more than 20,000 "confirmed" non-coding genes but only 10%-40% of them have been shown to have a function! That doesn't make any sense since the whole point of this debate is how to identify function.

Here's another bunch of arguments that Walter advances to demonstrate that a given sequence could be functional but not conserved. I'm going to quote the entire thing to give you a good sense of Walter's opinion.

A second limitation of a sequence-based conservation analysis of function is illustrated by recent insights from the functional probing of riboswitches. RNA structure, and hence dynamics and function, is generally established co-transcriptionally, as evident from, for example, bacterial ncRNAs including riboswitches and ribosomal RNAs, as well as the co-transcriptional alternative splicing of eukaryotic pre-mRNAs, responsible for the important, vast diversification of the human proteome across ∼200 cell types by excision of varying ncRNA introns. In the latter case, it is becoming increasingly clear that splicing regulation involves multiple layers synergistically controlled by the splicing machinery, transcription process, and chromatin structure. In the case of riboswitches, the interactions of the ncRNA with its multiple protein effectors functionally engage essentially all of its nucleotides, sequence-conserved or not, including those responsible for affecting specific distances between other functional elements. Consequently, the expression platform—equally important for the gene regulatory function as the conserved aptamer domain—tends to be far less conserved, because it interacts with the idiosyncratic gene expression machinery of the bacterium. Consequently, taking a riboswitch out of this native environment into a different cell type for synthetic biology purposes has been notoriously challenging. These examples of a holistic functioning of ncRNAs in their species-specific cellular context lay bare the limited power of pure sequence conservation in predicting all functionally relevant nucleotides.

I don't know much about riboswitches so I can't comment on that. As for alternative splicing, I assume he's suggesting that much of the DNA sequence for large introns is required for alternative splicing. That's just not correct. You can have effective alternative splicing with small introns. The only essential parts of intron sequences are the splice sites and a minimum amount of spacer.

Part of what he's getting at is the fact that you can have a functional transcript where the actual nucleotide sequence doesn't matter so it won't look conserved. That's correct. There are such sequences. For example, there seem to be some examples of enhancer RNAs, which are transcripts in the regulatory region of a gene where it's the act of transcription that's important (to maintain an open chromatin conformation, for example) and not the transcript itself. Similarly, not all intron sequences are junk because some spacer sequence is required to maintain a minimum distance between splice sites. All this is covered in Chapter 8 of my book ("Noncoding Genes and Junk RNA").

Are these examples enough to toss out the idea of sequence conservation as a proxy for function and assume that there are tens of thousands of such non-conserved genes in the human genome? I think not. The null hypothesis still holds. If you don't have any evidence of function then the transcript doesn't have a function—you may find a function at some time in the future but right now it doesn't have one. Some of the evidence for function could be sequence conservation but the absence of conservation is not an argument for function. If conservation doesn't work then you have to come up with some other evidence.

It's worth mentioning that, in the broadest sense, purifying selection isn't confined to nucleotide sequence. It can also take into account deletions and insertions. If a given region of the genome is deficient in random insertions and deletions then that's an indication of function in spite of the fact that the nucleotide sequence isn't maintained by purifying selection. The maintenance definition of function isn't restricted to sequence—it also covers bulk DNA and spacer DNA.

(This is a good time to bring up a related point. The absence of conservation (size or sequence) is not evidence of junk. Just because a given stretch of DNA isn't maintained by purifying selection does not prove that it is junk DNA. The evidence for a genome full of junk DNA comes from different sources and that evidence doesn't apply to every little bit of DNA taken individually. On the other hand, the maintenance function argument is about demonstrating whether a particular region has a function or not and it's about the proper null hypothesis when there's no evidence of function. The burden of proof is on those who claim that a transcript is functional.)

This brings us to the main point of Walter's objection to sequence conservation as an indication of function. You can see hints of it in the previous quotation where he talks about "holistic functioning of ncRNAs in their species-specific cellular context," but there's more ...

Some evolutionary biologists and philosophers have suggested that sequence conservation among genomes should be the primary, or perhaps only, criterion to identify functional genetic elements. This line of thinking is based on 50 years of success defining housekeeping and other genes (mostly coding for proteins) based on their sequence conservation. It does not, however, fully acknowledge that evolution does not actually select for sequence conservation. Instead, nature selects for the structure, dynamics and function of a gene, and its transcription and (if protein coding) translation products; as well as for the inertia of the same in pathways in which they are not involved. All that, while residing in the crowded environment of a cell far from equilibrium that is driven primarily by the relative kinetics of all possible interactions. Given the complexity and time dependence of the cellular environment and its environmental exposures, it is currently impossible to fully understand the emergent properties of life based on simple cause-and-effect reasoning.

The way I see it, his most important argument is that life is very complicated and we don't currently understand all of its emergent properties. This means that he is looking for ways to explain the complexity that he expects to be there. The possibility that there might be several hundred thousand regulatory RNAs seems to fulfil this need so they must exist. According to Nils Walter, the fact that we haven't (yet) proven that they exist is just a temporary lull on the way to rigorous proof.

This seems to be a common theme among those scientists who share this viewpoint. We can see it in John Mattick's writings as well. It's as though the logic of having a genome full of regulatory RNA genes is so powerful that it doesn't require strong supporting evidence and can't be challenged by contradictory evidence. The argument seems somewhat mystical to me. Its proponents are making the a priori assumption that humans just have to be a lot more complicated than what "reductionist" science is indicating and all they have to do is discover what that extra layer of complexity is all about. According to this view, the idea that our genome is full of junk must be wrong because it seems to preclude the possibility that our genome could explain what it's like to be human.

Walter, N.G. (2024) Are non‐protein coding RNAs junk or treasure? An attempt to explain and reconcile opposing viewpoints of whether the human genome is mostly transcribed into non‐functional or functional RNAs. BioEssays:2300201. [doi: 10.1002/bies.202300201]

Thursday, May 30, 2013

What Does the Bladderwort Genome Tell Us about Junk DNA?

The so-called "C-Value Paradox" was described over thirty years ago. Here's how Benjamin Lewin explained it in Genes II (1983).

The C value paradox takes its name from our inability to account for the content of the genome in terms of known function. One puzzling feature is the existence of huge variations in C values between species whose apparent complexity does not vary correspondingly. An extraordinary range of C values is found in amphibians where the smallest genomes are just below 10⁹ bp while the largest are almost 10¹¹. It is hard to believe that this could reflect a 100-fold variation in the number of genes needed to specify different amphibians.

Since then we have found dozens and dozens of examples of very similar looking species with vastly different genome sizes. These observations require an explanation and the best explanation by far is that most of the DNA in the genomes of multicellular species is junk. In fact, it's the data on genome sizes that provide the best evidence for junk DNA.
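Lewin's "100-fold" figure is simply the ratio of the two ends of the amphibian range. A trivial Python check, using the round numbers from the quotation (the real extremes sit slightly inside this range):

```python
import math

# Round numbers from the Lewin quotation: the smallest amphibian genomes are
# "just below 10^9 bp" and the largest are "almost 10^11 bp".
smallest_bp = 1e9
largest_bp = 1e11

fold = largest_bp / smallest_bp
orders_of_magnitude = math.log10(fold)
print(f"{fold:.0f}-fold range ({orders_of_magnitude:.0f} orders of magnitude)")
```

If gene number tracked genome size, the largest amphibian genomes would need about a hundred times as many genes as the smallest, which is the implausibility Lewin was pointing to.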

Monday, December 19, 2011

Jonathan McLatchie and Junk DNA

THEME

Genomes & Junk DNA

Jonathan McLatchie takes on PZ Myers in a spirited attack on junk DNA [Treasure in the Genetic Goldmine: PZ Myers Fails on "Junk DNA"]. The Intelligent Design Creationists are convinced that most of our genome is functional because that's what a good designer would create. They claim that junk DNA is a myth and their "evidence" is selective quotations from the scientific literature. They ignore the big picture, as they so often do.

I discussed most of the creationist arguments in my review of The Myth of Junk DNA.

Jonathan McLatchie analyzes three arguments made by PZ Myers in his presentation at Skepticon IV. In that talk PZ said that introns are junk, telomeres are junk, and transposons are junk. I have already stated that I disagree with PZ on these points [see PZ Myers Talks About Junk DNA]. Now I want to be clear on why Jonathan McLatchie is wrong.

- Introns are mostly junk. I think PZ exaggerated a bit when he dismissed all introns as junk. My position is that we should treat introns as functional elements of a gene even though many (but not all) of them could probably be deleted without affecting the survival of the species. Each intron has about 50-80 bp of essential information that's required for proper splicing [Junk in Your Genome: Protein-Encoding Genes]. The rest of the intron, which can be thousands of base pairs in length, is mostly junk [Junk in Your Genome: Intron Size and Distribution]. Some introns contain essential gene regulatory regions and some contain essential genes. That does not mean that all intron sequences are functional.

- Telomeres are not junk. I don't think telomeres are junk [Telomeres]. They are absolutely required for proper DNA replication. PZ Myers agrees that telomeres (and centromeres) are functional DNA (28 minutes into the talk). Jonathan McLatchie claims that PZ describes telomeres as junk DNA, "Myers departs from the facts, however, when he asserts that these telomeric repetitive elements are non-functional." McLatchie is not telling the truth.

- Defective Transposons are Junk. PZ Myers talks about transposons as mobile genetic elements and states that transposons make up more than half of our genome. That's all junk according to PZ Myers. My position is that the small number of active transposons are functional selfish genes and the real junk is the defective transposon sequences that make up most of the genome [Transposon Insertions in the Human Genome]. Thus, I differ a bit from PZ's position. Jonathan McLatchie, like Jonathan Wells, argues that because the occasional defective transposon in the odd species has acquired a function, this means that most of the defective transposon sequences (~50% of the genome) are functional. This is nonsense.

[Image Credit: The image shows human chromosomes labelled with a telomere probe (yellow), from Christopher Counter at Duke University.]

Monday, September 10, 2012

Science Writes Eulogy for Junk DNA

It doesn't take much imagination to guess what Elizabeth Pennisi would write when she heard about the new ENCODE data. Yep, you guessed it. She says that the ENCODE Project Writes Eulogy for Junk DNA.

THEME

Genomes & Junk DNA

Let's look at the opening paragraph in her "eulogy."

When researchers first sequenced the human genome, they were astonished by how few traditional genes encoding proteins were scattered along those 3 billion DNA bases. Instead of the expected 100,000 or more genes, the initial analyses found about 35,000 and that number has since been whittled down to about 21,000. In between were megabases of “junk,” or so it seemed.

Saturday, October 05, 2013

Barry Arrington, Junk DNA, and Why We Call Them Idiots

I'm reminded of the word "pathos" but I had to look it up to make sure I got it right. It means something that causes people to feel pity, sadness, or even compassion. It's the right word to describe what's happening. It's also similar to the word "pathetic."

Here's what's happening.

As you know, Barry Arrington claimed that the IDiots made a prediction. They predicted that there's no such thing as junk DNA. They predicted that most of our genome would turn out to have a function [Let’s Put This One To Rest Please]. That much is true. It makes perfect sense because an Intelligent Design Creator wouldn't create a genome that was 90% junk.

Tuesday, May 24, 2011

Junk & Jonathan: Part 6—Chapter 3

This is part 6 of my review of The Myth of Junk DNA. For a list of other postings on this topic see the link to Genomes & Junk DNA in the "theme box" below or in the sidebar under "Themes."

We learn in Chapter 9 that Wells has two categories of evidence against junk DNA. The first covers evidence that sequences probably have a function and the second covers specific known examples of functional sequences. In the first category there are two lines of evidence: transcription and conservation. Both of them are covered in Chapter 3 making this one of the most important chapters in the book. The remaining category of specific examples is described in Chapters 4-7.

The title of Chapter 3 is Most DNA Is Transcribed into RNA. As you might have anticipated, the focus of Wells' discussion is the ENCODE pilot project that detected abundant transcription in the 1% of the genome that they analyzed (ENCODE Project Consortium, 2007). Their results suggest that most of the genome is transcribed. Other studies support this idea and show that transcripts often overlap and many of them come from the opposite strand in a gene giving rise to antisense RNAs.

The original Nature paper says,

... our studies provide convincing evidence that the genome is pervasively transcribed, such that the majority of its bases can be found in primary transcripts, including non-protein-coding transcripts, and those that extensively overlap one another.

The authors of these studies firmly believe that evidence of transcription is evidence of function. This has even led some of them to propose a new definition of a gene [see What is a gene, post-ENCODE?]. There's no doubt that many molecular biologists take this data to mean that most of our genome has a function and that's the same point that Wells makes in his book. It's evidence against junk DNA.

What are these transcripts doing? Wells devotes a section to "Specific Functions of Non-Protein-Coding RNAs." These RNAs may be news to most readers but they are well known to biochemists and molecular biologists. This is not the place to describe all the known functional non-coding RNAs but keep in mind that there are three main categories: ribosomal RNA (rRNA), transfer RNA (tRNA), and a heterogeneous category called small RNAs. There are dozens of different kinds of small RNAs including unique ones such as the 7SL RNA of the signal recognition particle, the P1 RNA of RNAse P and the guide RNA in telomerase. Other categories include the spliceosome RNAs, snoRNAs, piRNAs, siRNAs, and miRNAs. These RNAs have been studied for decades. It's important to note that the confirmed examples are transcribed from genes that make up less than 1% of the genome.

One interesting category is called "long noncoding RNAs" or lncRNAs. As the name implies, these RNAs are longer than the typical small RNAs. Their functions, if any, are largely unknown although a few have been characterized. If we add up all the genes for these RNAs and assume they are functional it will account for about 0.1% of the genome so this isn't an important category in the discussion about junk DNA.

THEME

Genomes & Junk DNA

So, we're left with a puzzle. If more than 90% of the genome is transcribed but we only know about a small number of functional RNAs then what about the rest?

Opponents of junk DNA—both creationists and scientists—would have you believe that there's a lot we don't know about genomes and RNA. They believe that we will eventually find functions for all this RNA and prove that the DNA that produces them isn't junk. This is a genuine scientific controversy. What do their scientific opponents (I am one) say about the ENCODE result?

Criticisms of the ENCODE analysis take two forms ...

- The data is wrong and only a small fraction of the genome is transcribed

- The data is mostly correct but the transcription is spurious and accidental. Most of the products are junk RNA.

Several papers have appeared that call into question the techniques used by the ENCODE consortium. They claim that many of the identified transcribed regions are artifacts. This is especially true of the repetitive regions of the genome that make up more than half of the total content. If any one of these regions is transcribed then the transcript will likely hybridize to the remaining repeats giving a false impression of the amount of DNA that is actually transcribed.

Of course, Wells doesn't mention any of these criticisms in Chapter 3. In fact, he implies that every published paper is completely accurate in spite of the fact that most of them have never been replicated and many have been challenged by subsequent work. Readers of The Myth of Junk DNA will be led, intentionally or otherwise, to assume that if a paper appears in the scientific literature it must be true.

But criticisms of the ENCODE results are so widespread that they can't be ignored, so Wells is forced to deal with them in Chapter 8. (Why not in Chapter 3 when they are first mentioned?) In particular, Wells has to address the van Bakel et al. (2010) paper from Tim Hughes' lab here in Toronto. This paper was widely discussed when it came out last year [see: Junk RNA or Imaginary RNA?]. We'll deal with it when I cover Chapter 9 but, suffice to say, Wells dismisses the criticism.

Criticisms of the Interpretation

The other form of criticism focuses on the interpretation of the data rather than its accuracy. Most of us who teach transcription take pains to point out to our students that RNA polymerase binds non-specifically to DNA and that much of this binding will result in spurious transcription at a very low frequency. This is exactly what we expect from a knowledge of transcription initiation [How RNA Polymerase Binds to DNA]. The ENCODE data shows that most of the genome is "transcribed" at a frequency of once every few generations (or days) and this is exactly what we expect from spurious transcription. The RNAs are non-functional accidents due to the sloppiness of the process [Useful RNAs?].

Wells doesn't mention any of this. I don't know if that's because he's ignorant of the basic biochemistry and hasn't read the papers or whether he is deliberately trying to mislead his readers. It's probably a bit of both.

It's not as if this is some secret known only to the experts. The possibility of spurious transcription has come up frequently in the scientific literature in the past few years. For example, Guttman et al. (2009) write,

Genomic projects over the past decade have used shotgun sequencing and microarray hybridization to obtain evidence for many thousands of additional non-coding transcripts in mammals. Although the number of transcripts has grown, so too have the doubts as to whether most are biologically functional. The main concern was raised by the observation that most of the intergenic transcripts show little to no evolutionary conservation. Strictly speaking, the absence of evolutionary conservation cannot prove the absence of function. But the remarkably low rate of conservation seen in the current catalogues of large non-coding transcripts (less than 5% of cases) is unprecedented and would require that each mammalian clade evolves its own distinct repertoire of non-coding transcripts. Instead, the data suggest that the current catalogues may consist largely of transcriptional noise, with a minority of bona fide functional lincRNAs hidden amid this background.

This paper is in the Wells reference list so we know that he has read it.

What these authors are saying is that the data is consistent with spurious transcription (noise). Part of the evidence is the lack of any sequence conservation among the transcripts. It's as though they were mostly derived from junk DNA.

Sequence Conservation

Recall that the purpose of Chapter 3 is to show that junk DNA is probably functional. The first part of the chapter purportedly shows that most of our genome is transcribed. The second part addresses sequence conservation.

Here's what Wells says about sequence conservation.

Widespread transcription of non-protein-coding DNA suggests that the RNAs produced from such DNA might serve biological functions. Ironically, the suggestion that much non-protein-coding DNA might be functional also comes from evolutionary theory. If two lineages diverge from a common ancestor that possesses regions of non-protein-coding DNA, and these regions are really nonfunctional, then they will accumulate random mutations that are not weeded out by natural selection. Many generations later, the sequences of the corresponding non-protein-coding regions in the two descendant lineages will probably be very different. [Due to fixation by random genetic drift—LAM] On the other hand, if the original non-protein-coding DNA was functional, then natural selection will tend to weed out mutations affecting that function. Many generations later, the sequences of the corresponding non-protein-coding regions in the two descendant lineages will still be similar. (In evolutionary terminology, the sequences will be "conserved.") Turning the logic around, Darwinian theory implies that if evolutionarily divergent organisms share similar non-protein-coding DNA sequences, those sequences are probably functional.

Wells then references a few papers that have detected such conserved sequences, including the Guttman et al. (2009) paper mentioned above. They found "over a thousand highly conserved large non-coding RNAs in mammals." Indeed they did, and this is strong evidence of function.¹ Every biochemist and molecular biologist will agree. One thousand lncRNAs represent 0.08% of the genome. The sum total of all other conserved sequences is also less than 1%. Wells forgets to mention this in his book. He also forgets to mention the other point that Guttman et al. make; namely, that the lack of sequence conservation suggests that the vast majority of transcripts are non-functional. (Oops!)
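The 0.08% figure is back-of-the-envelope arithmetic. Here it is as a short Python sketch. The post doesn't say which average transcript length it used, so the 2,500 bp value below is an assumption chosen for illustration, and the genome size is an approximate haploid human value of 3.1 × 10⁹ bp.

```python
# Number of conserved lncRNAs from the text; the average length is an
# assumed value for illustration only (not taken from Guttman et al.).
n_lncrnas = 1_000
avg_length_bp = 2_500  # assumption
genome_bp = 3.1e9      # approximate haploid human genome size

fraction = n_lncrnas * avg_length_bp / genome_bp
print(f"{fraction * 100:.2f}% of the genome")
```

Even if the true average length were several times larger, the total would still be well under 1% of the genome.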

There's irony here. We know that the sequences of junk DNA are not conserved and this is taken as evidence (not conclusive) that the DNA is non-functional. The genetic load argument makes the same point. We know that the vast majority of spurious RNA transcripts are also not conserved from species to species and this strongly suggests that those RNAs are not functional. Wells ignores this point entirely—it never comes up anywhere in his book. On the other hand, when a small percentage of DNA (and transcripts) are conserved, this gets prominent mention.

Wells doesn't believe in common ancestry so he doesn't believe that sequences are "conserved." (Presumably they reflect common design or something like that.) Nevertheless, when an evolutionary argument of conservation suits his purpose he's happy to invoke it, while, at the same time, ignoring the far more important argument about lack of conservation of the vast majority of spurious transcripts. Isn't that strange behavior?

The bottom line here is that Jonathan Wells is correct to point to the ENCODE data as a problem for junk DNA proponents. This is part of the ongoing scientific controversy over the amount of junk in our genome. Where I fault Wells is his failure to explain to his readers that this is disputed data and interpretation. There's no slam-dunk case for function here. In fact, the tide seems to be turning more and more against the original interpretation of the data. Most knowledgeable biochemists and molecular biologists do not believe that >90% of our genome is transcribed to produce functional RNAs.

UPDATE: How much of the genome do we expect to be transcribed on a regular basis? Protein-encoding genes account for about 30% of the genome, including introns (mostly junk). They will be transcribed. Other genes produce functional RNAs and together they cover about 3% of the genome. Thus, we expect that roughly a third of the genome will be transcribed at some time during development. We also expect that a lot more of the genome will be transcribed on rare occasions just because of spurious (accidental) transcription initiation. This doesn't count. Some pseudogenes, defective transposons, and endogenous retroviruses have retained the ability to be transcribed on a regular basis. This may account for another 1-2% of the genome. They produce junk RNA.
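The expected transcribed fraction in the update is a simple sum. A minimal Python sketch using the round percentages given there (they are the post's estimates, not measured values):

```python
# Round percentages of the genome taken from the update.
regularly_transcribed = {
    "protein-coding genes, including introns": 30.0,
    "genes for functional RNAs": 3.0,
}
expected_pct = sum(regularly_transcribed.values())

# Pseudogenes, defective transposons and endogenous retroviruses that are
# still transcribed regularly add roughly 1-2% more (producing junk RNA).
with_junk_low = expected_pct + 1.0
with_junk_high = expected_pct + 2.0

print(f"genes: {expected_pct:.0f}% (about a third)")
print(f"including regularly transcribed junk: {with_junk_low:.0f}-{with_junk_high:.0f}%")
```

Spurious transcription is deliberately left out of the tally because it doesn't count; rare accidental initiation is what pushes the raw "fraction transcribed" far above this figure.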

1. Conservation is not proof of function. In an effort to test this hypothesis Nóbrega et al. (2004) deleted two large regions of the mouse genome containing large numbers of conserved non-coding sequences. They found that the mice with the deleted regions showed no phenotypic effects, indicating that the DNA was junk. Jonathan Wells forgot to mention this experiment in his book.

Guttman, M. et al. (2009) Chromatin signature reveals over a thousand highly conserved non-coding RNAs in mammals. Nature 458:223-227. [NIH Public Access]

Nóbrega, M.A., Zhu, Y., Plajzer-Frick, I., Afzal, V. and Rubin, E.M. (2004) Megabase deletions of gene deserts result in viable mice. Nature 431:988-993. [Nature]

The ENCODE Project Consortium (2007) Nature 447:799-816. [PDF]

Saturday, January 06, 2024

Why do Intelligent Design Creationists lie about junk DNA?

A recent post on Evolution News (sic) promotes a new podcast: Casey Luskin on Junk DNA’s “Kuhnian Paradigm Shift”. You can listen to the podcast here but most Sandwalk readers won't bother because they've heard it all before. [see Paradigm shifting.]

Luskin repeats the now familiar refrain of claiming that scientists used to think that all non-coding DNA was junk. Then he goes on to list recent discoveries showing that some of this non-coding DNA is functional. The truth is that no knowledgeable scientist ever claimed that all non-coding DNA was junk. The original idea of junk DNA was based on evidence that only 10% of the genome is functional and these scientists knew that coding regions occupied only a few percent. Thus, right from the beginning, the experts on genome evolution knew about all sorts of functional non-coding DNA such as regulatory sequences, non-coding genes, and other things.