The three main human databases (GENCODE/Ensembl, RefSeq, UniProtKB) contain a total of 22,210 protein-coding genes but only 19,446 of these genes are found in all three databases. That leaves 2764 potential genes that may or may not be real. A recent publication suggests that most of them are not real genes (Abascal et al., 2018). The issue is the same problem that I discussed in recent posts [Disappearing genes: a paper is refuted before it is even published] [Nature falls (again) for gene hype].

There are many ways of predicting protein-coding genes using various algorithms that look for open reading frames. The software is notorious for overpredicting genes, leading to many false positives, and that's why every new genome sequence contains hundreds of so-called "orphan" genes that lack homologues in other species. When these predicted genes are examined more closely they turn out to be artifacts; they are not functional genes.

The human genome is the most intensely studied genome so you can be sure that every potential gene is closely examined. The result is that there are only a handful of potential orphan genes left in the databases. We can safely assume that if other genomes were examined with the same kind of scrutiny the number of genes would drop and the hype about orphan genes would disappear [How many genes do we have and what happened to the orphans?] [IDiots, suckers, and the octopus genome].

I like the opening sentence of the Abascal et al. paper ...

Before the human genome was sequenced, most researchers estimated that human protein coding gene numbers would be between 25 000 and 40 000, with some estimates closer to 100 000 genes.

It's not perfect but it's the beginning of an attempt to correct the mythology that permeates the scientific literature. Instead of saying "most researchers" I would have said "most experts" and instead of saying "some estimates" I would have said "some researchers were making wild guesses as high as 100,000".

The authors go on to explain why it's so difficult to determine how many genes we have. In order to qualify as a real gene you have to show that the potential sequence has all the characteristics of real genes. In an ideal case, you have to show that the product has a function. This task is enormously difficult in the case of potential genes for noncoding RNAs. That's why estimates of noncoding genes range from a few thousand to more than 100,000. Right now, it's safe to say that the consensus view has narrowed to a few thousand confirmed examples with strong supporting evidence and about 5,000 more that are reasonable candidates [How many lncRNAs are functional?].

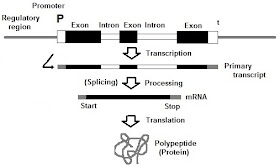

In theory, it's easier to identify real protein-coding genes and that's what the Abascal et al. paper is all about. There is only one way to confirm whether a potential protein-coding gene is real or not and that's manual curation. An expert scientist has to look at each gene and evaluate all the evidence. Here's how the authors describe the problem in their paper ...

The task of manually inspecting >20 000 annotated coding genes is enormous and the process has taken many years. Manual annotators have to accomplish two difficult tasks, detecting the remaining hard-to-find coding genes, and separating bona fide coding genes from misannotated pseudogenes and non-coding genes. Curators determine the status of the gene models based on transcript (ESTs and mRNAs) and protein data (from the main protein databases) available for each gene. Protein-coding potential depends first on whether an open reading frame (ORF) can be defined. However, the definition of ORFs is complicated by the fact that many noncoding transcripts may contain long ORFs by chance, particularly in GC-rich regions. In order to get round this problem, annotators also require some sort of protein evidence, such as whether the locus has sequence similarity to orthologues from other species, whether the resulting gene product contains Pfam functional domains, or whether experimental data is available from published papers, large-scale interaction studies or mass spectrometry experiments.

The gold standard is proof that the gene produces a protein with a known function. The next best evidence is that the putative gene is under negative selection with respect to potential homologues in other species. (Keeping in mind that humans may harbor real de novo genes that aren't found in other species. These are genuine orphan genes.)
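The remark in the quoted passage about long ORFs arising by chance in GC-rich regions follows from the fact that all three stop codons (TAA, TAG, TGA) are rich in A and T. Here is a minimal sketch of that reasoning. It assumes a deliberately crude model that is not from the paper: bases are independent, A and T are equally frequent, and G and C are equally frequent. The function names are made up for illustration.

```python
def stop_codon_probability(gc_content: float) -> float:
    """Chance that a random codon is TAA, TAG, or TGA.

    Assumes independent bases with P(G) = P(C) = gc/2 and
    P(A) = P(T) = (1 - gc)/2. This is a toy model, not real genome statistics.
    """
    if not 0.0 <= gc_content <= 1.0:
        raise ValueError("gc_content must be between 0 and 1")
    p_at = (1.0 - gc_content) / 2.0  # probability of A (and of T)
    p_gc = gc_content / 2.0          # probability of G (and of C)
    p_taa = p_at * p_at * p_at
    p_tag = p_at * p_at * p_gc
    p_tga = p_at * p_gc * p_at
    return p_taa + p_tag + p_tga


def expected_codons_until_stop(gc_content: float) -> float:
    """Mean number of codons read up to and including the first stop codon.

    Under the toy model the position of the first stop is geometric,
    so the mean is 1 / p.
    """
    p = stop_codon_probability(gc_content)
    if p == 0.0:
        raise ValueError("no stop codons are possible at this GC content")
    return 1.0 / p


if __name__ == "__main__":
    for gc in (0.3, 0.5, 0.7):
        print(f"GC = {gc:.0%}: about {expected_codons_until_stop(gc):.1f} codons per random ORF")
```

Under these assumptions the average chance ORF gets longer as GC content goes up, which is why an open reading frame alone is weak evidence and curators ask for protein evidence as well.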

Genes and transcripts may change their status between releases as annotators adjust the annotation to the available evidence. A gene's status is updated based on the available evidence and this evidence can change over time. For example GENCODE manual annotators recently decided to reclassify as non-coding approximately 200 ‘orphan’ protein coding genes. Most of these genes were early in silico predictions.

The authors of this paper went to a lot of work to clean up the UniProtKB database to eliminate redundancies and obvious errors. They merged this database with the RefSeq database then compared the combined database to their highly curated GENCODE/Ensembl database. The three databases have different numbers of protein-coding genes.

- 20,266 GENCODE/Ensembl (v24)

- 21,212 UniProtKB (June 2016)

- 20,450 RefSeq 107
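The comparison itself boils down to set arithmetic: the union of the three gene lists is everything anyone annotates as coding, and the intersection is the core set that all three agree on. The sketch below uses invented toy identifiers (`GENE_A` and so on) and a hypothetical helper name. It also assumes the identifiers have already been mapped to a common namespace, which in practice is the hard part.

```python
def summarize_gene_sets(databases: dict[str, set[str]]) -> dict[str, int]:
    """Count genes in the union, in the shared core, and outside the core."""
    if not databases:
        raise ValueError("need at least one database")
    gene_sets = list(databases.values())
    union = set().union(*gene_sets)        # annotated by at least one database
    core = set.intersection(*gene_sets)    # annotated by every database
    return {
        "union": len(union),
        "core": len(core),
        "not_in_all": len(union - core),
    }


# Toy data only; these are not real gene identifiers or real counts.
toy_databases = {
    "GENCODE/Ensembl": {"GENE_A", "GENE_B", "GENE_C", "GENE_D"},
    "UniProtKB": {"GENE_A", "GENE_B", "GENE_C", "GENE_E", "GENE_F"},
    "RefSeq": {"GENE_A", "GENE_B", "GENE_D", "GENE_E"},
}

if __name__ == "__main__":
    print(summarize_gene_sets(toy_databases))
```

With the real data the union is 22,210 genes and the core is 19,446, as stated at the top of this post.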

About 25% of the "excess" genes are annotated as pseudogenes by other databases. About 17% of them are antisense genes, meaning genes that overlap real genes on the opposite strand. Many of the questionable genes are "read-through" genes characterized by transcripts that skip the last exon (containing a stop codon) and include exons from a neighboring gene. The GENCODE database had 41 duplicates that weren't previously recognized.

Potential genes were analyzed by looking at possible transcripts in a variety of tissues. Most of the questionable genes had complementary transcripts in only a few tissues and the levels of these RNAs were very low—well below the levels for confirmed genes.

Protein expression was analyzed by searching for peptides in various mass spec databases. Peptides were detected for only 6% of the questionable genes.

Evidence of negative selection was assayed by looking at variants (alleles) in 2504 human genomes. The questionable protein-coding genes had many more variants than real genes, suggesting that they are not under strong negative selection. Furthermore, the questionable genes had many more potentially deleterious mutations and more non-synonymous substitutions.

The result of all this analysis/curation is that there are about 19,500 protein-coding genes that are accepted by all three databases, but that leaves several thousand potential protein-coding genes that may or may not be real genes. The authors also found 1470 genes in the core dataset that don't meet the highest standards for real genes. In total, there are 4234 potential genes out of 22,210 that may not be real protein-coding genes. There may only be 17,976 (22,210 - 4234 = 17,976) protein-coding genes in the human genome.
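For anyone who wants to check the bookkeeping, here is the arithmetic spelled out. The input numbers are the ones quoted in this post; the variable names are mine, and I'm assuming the 4234 figure is the sum of the genes missing from at least one database and the 1470 flagged genes in the core set.

```python
TOTAL_ANNOTATED = 22_210   # genes annotated as coding in at least one database
IN_ALL_THREE = 19_446      # genes annotated as coding in all three databases
FLAGGED_IN_CORE = 1_470    # core genes that don't meet the highest standards

# Genes missing from at least one of the three databases.
not_in_all_three = TOTAL_ANNOTATED - IN_ALL_THREE

# Everything with unresolved coding status.
questionable = not_in_all_three + FLAGGED_IN_CORE

# What is left if every questionable gene turns out not to be coding.
remaining = TOTAL_ANNOTATED - questionable

# Share of annotated genes that are questionable.
fraction_questionable = questionable / TOTAL_ANNOTATED

if __name__ == "__main__":
    print(f"not in all three databases: {not_in_all_three}")
    print(f"questionable in total:      {questionable}")
    print(f"remaining coding genes:     {remaining}")
    print(f"fraction questionable:      {fraction_questionable:.1%}")
```

The fraction comes out at about 19%, which matches the "almost one in five" in the title of the paper.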

Depending on the evidence from transcripts, proteins, and genetic variation, the questionable genes range from highly probable to very unlikely. The authors warn against including questionable genes in a database because errors can be propagated. They say,

The set of human coding genes needs to be as complete as possible for biomedical experiments, but inevitably some genes will be misannotated as coding. Once a gene has entered a reference set it may be propagated in large-scale databases and its coding potential may end up being validated via circular annotation. Detecting errors, retracing steps and rescinding the coding status of a gene once it is annotated as coding is a difficult process, so a system to catch and label genes that have conflicting or insufficient coding support is useful.

Abascal, F., Juan, D., Jungreis, I., Martinez, L., Rigau, M., Rodriguez, J.M., Vazquez, J., and Tress, M.L. (2018) Loose ends: almost one in five human genes still have unresolved coding status. Nucleic Acids Research, published online June 30, 2018. [doi: 10.1093/nar/gky587]

"The next best evidence is that the putative gene is under negative selection with potential homologues in other species."

Don't you mean purifying selection? I take negative selection to mean it is selected against.

If it's a real gene there will be less variation than if the sequence is junk and evolving neutrally. In other words, the real gene will be "conserved" because it's under negative selection.

I edited the phrase "under negative selection with potential homologues" to "under negative selection with respect to potential homologues."

Thanks for discussing our paper, Larry, it's a fair summary of it!

The only bit which isn't right is "Evidence of positive selection was assayed by looking at variants (alleles) in 2504 human genomes. The questionable protein-coding genes had many more variants than real genes, suggesting that they are not under strong positive selection." We looked for evidence of negative selection (=purifying selection), not positive selection. When you don't see evidence for negative selection there may be three causes: a) lack of power; b) positive selection; c) neutral evolution. In our case we could discard lack of power because many genes were tested altogether. We cannot entirely rule out positive selection, but it is very unlikely that positive selection is acting on so many strange genes. The most parsimonious interpretation is that most of those genes are evolving neutrally in the human population (at least neutrally with respect to the mode of evolution of protein-coding genes; they may be noncoding genes evolving under purifying selection, we cannot say anything about that).

I should also probably point out that the work that we had to do to clean up UniProt was not because it had more errors, but because UniProt is a protein-centric database, while GENCODE and RefSeq are genome coordinate-based. There wasn't always a 1-to-1 equivalence between UniProt protein entries and RefSeq/GENCODE gene entries and this often had to be fixed manually. Thanks again.

Thank you for pointing out the typo. I fixed it.

Professor Moran

How many different types of proteins does an adult human have?

20,000? And how many other molecules?

Thanks

Sorry for my English, I'm from Spain

How many proteins in the human proteome?

Cell biology by the numbers:

http://book.bionumbers.org

"The set of human coding genes needs to be as complete as possible for biomedical experiments, but inevitably some genes will be misannotated as coding."

Presumably some genes will also be misannotated as non-coding. So I guess the question is which type of error would be considered more serious for medical experiments?

How long is a piece of string?

Both are important, but require different strategies. The paper concentrated on coding genes that may not code for proteins. At the same time GENCODE manual annotators have added 137 new coding genes just in the last year (https://www.biorxiv.org/content/biorxiv/early/2018/07/02/360602.full.pdf).

However, I do think that at this moment there are many more misclassified coding genes in the human genome than there are missing coding genes.

In my experience, I find that dealing with too much rubbish (alternative transcripts, wrong protein-coding genes, a bunch of non-coding genes, so many ChIP-seq peaks, etc.) makes you waste a lot of time and makes it difficult to identify relevant signals.

For example, when studying germline variants the ones of highest interest are usually the ones with highest impact on protein-coding. Well, these variants happen much more frequently in alternative transcripts that are very unreliable (and they happen because those transcripts are not under negative selection); investigating them is a waste of time (in my opinion).

Mutations that are medically relevant can occur in protein-coding genes, noncoding genes, other functional elements in the genome, and in junk DNA. We need to have an accurate picture of everything in the genome in order to interpret genetic variants that have phenotypic effects.

Presumably whether a sequence is classified as coding or non-coding is going to affect 1. its probability of being sequenced as part of a diagnostic test and 2. the type of analyses any mutations within the sequence will be subjected to in looking for causal genotype-phenotype relationships. So has there been enough diagnostic whole genome sequencing done that one can estimate what fraction of disease-causing mutations are identified by whole genome sequencing that would have been missed by whole exome sequencing? Along the same lines, are there estimates of what fraction of mutations initially identified as variants of unknown significance are ultimately classified as benign?

A new paper that suggests the number of coding genes may be less than 20000.

https://academic.oup.com/nar/advance-article/doi/10.1093/nar/gky587/5047265